Optimizing the GPU kernel

1. Reduction to a single value

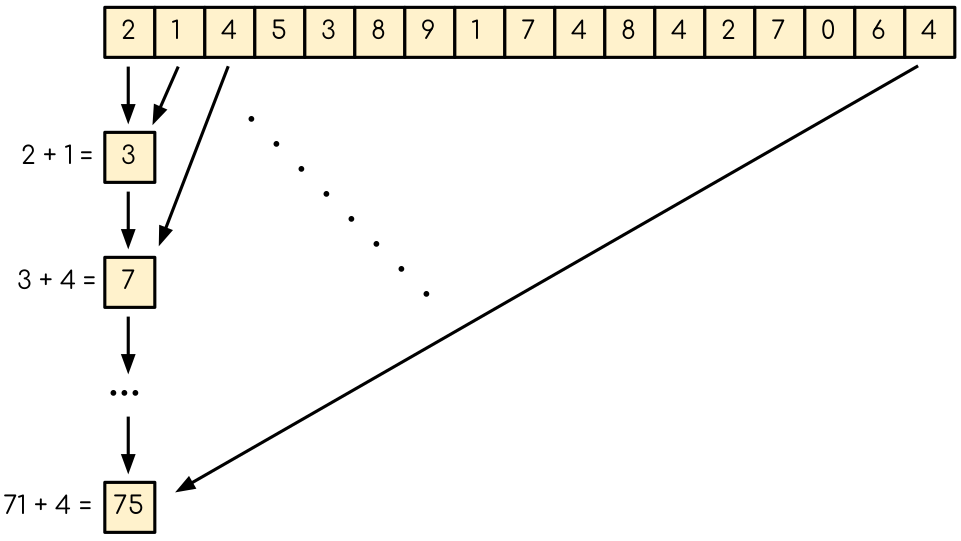

In this part of the workshop, we are going to investigate how the individual kernel can take advantage of all the features provided by GPU. As an example, we are going to use the reduction problem. Consider a vector of N elements that contains floating point numbers from 0 to 1. The task is to compute the sum of all the elements in the vector. The basic approach in a serial code is to designate a single floating point variable and add elements to it one by one in a loop. Thus, on each iteration, one floating point number will be added to accumulating value, as indicated on the figure below.

A reduction of an array to a single value. Each iteration (unfolded on the Figure) takes one element of the vector and adds it to the accumulated value.

On a GPU, many threads are running simultaneously. This means that simple approach of adding values to a single number will not work out of the box — we simply don’t know which of the threads will be adding respective value at a given time. In case two threads are trying to add to the value simultaneously, we can encounter a problem called race condition. In particular, if thread number one tries to make a binary addition, it should first read the accumulated value. If, at the same time, thread number two does the same thing, it will first read the value as well. In case this happens before the first thread saves the data, the result from the first thread will overwrite the value and the addition from the thread one will be lost. To avoid race conditions, the summation has to be done in a thread-safe fashion. Likely, CUDA provides atomic functions, that ensures that all the operations are done thread-safe. Atomic functions have the following signature:

__device__ float atomicAdd(float* address, float val)

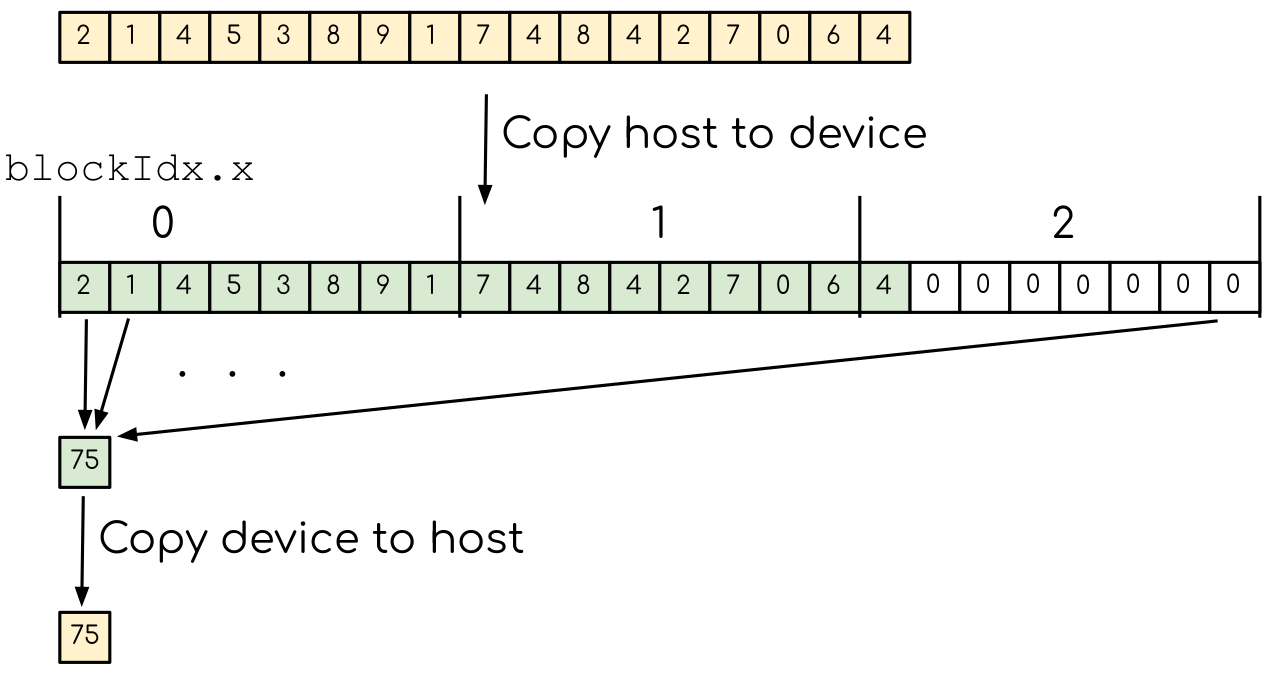

These can only be called from the device, where the application runs in many threads. The function takes the address, where the data will be atomically added and a value to add. Note, that the atomic operations can be called on a system level, device level and thread block level and these will result in different performance. Let us first adopt a simple CPU code that accumulates values to a single variable to run on a GPU. Note that this approach is not desirable both from correctness and performance standpoints, as we will see later. The figure below illustrates how the CPU algorithm can be adopted to run on a GPU with atomic operations. Since the number of threads on a GPU is a multiple by a block size, some threads may try to access the elements that are out of bonds. So either extra condition should be added ot the array should be extended by zeroes, as shown on the figure.

A reduction of an array to a single value on a GPU. Data is copied to the GPU memory, where each thread adds one element to the accumulated value. Note that the thread-safe atomic operations have to be used in order to ensure that there are no race conditions. Many threads will run simultaneously on a GPU, so there is no need for a loop over the indices.

Basic reduction code

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float result = 0.0;

// Timing

clock_t start = clock();

// Main loop

for (int i = 0; i < numElements; i++)

{

result = result + data[i];

}

// Timing

clock_t finish = clock();

printf("The result is: %f\n", result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(data);

return 0;

}

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

#define BLOCK_SIZE 256

__global__ void reduce_kernel(const float* data, float* result, int numElements)

{

int i = threadIdx.x + blockIdx.x*blockDim.x;

if (i < numElements)

{

atomicAdd(result, data[i]);

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* h_data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

h_data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float h_result = 0.0;

float* d_data;

cudaMalloc((void**)&d_data, numElements*sizeof(float));

cudaMemcpy(d_data, h_data, numElements*sizeof(float), cudaMemcpyHostToDevice);

int threadsPerBlock = BLOCK_SIZE;

int numBlocks = numElements/BLOCK_SIZE + 1;

float* d_result;

cudaMalloc((void**)&d_result, 1*sizeof(float));

cudaMemset(d_result, 0.0, 1);

// Timing

clock_t start = clock();

// Call the reduction kernel

reduce_kernel<<<numBlocks, threadsPerBlock>>>(d_data, d_result, numElements);

cudaMemcpy(&h_result, d_result, 1*sizeof(float), cudaMemcpyDeviceToHost);

// Timing

clock_t finish = clock();

printf("The result is: %f\n", h_result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(h_data);

cudaFree(d_data);

cudaFree(d_result);

return 0;

}

Converty the C++ code to CUDA using atomic operations.

To compile the CPU code, type:

gcc reduction_cpu_1.cpp -o reduction_cpu_1

Change the extension to .cu so that nvcc will understand that the code should be compiled for a GPU.

Allocate the device buffer for input data and a single-float buffer for the result. Copy data to the GPU. Make sure that the value you will be accumulating to is zero:

cudaMalloc(..)function does not set values to zero. This can be done by copying zero from the CPU memory withcudaMemcpy(..)or by thecudaMemset(..)function that sets the desired value to the provided address:__host__ cudaError_t cudaMemset(void* devPtr, int value, size_t count)

Create the CUDA kernel that will use

atomicAdd(..)to accumulate the data.Call the kernel in appropriate number of blocks. Remember that the total number of elements in array can be arbitrary and non-divisible by the size of a single block. Make sure that the array index does not go out of bonds within the kernel.

Copy the result back to the CPU.

To compile the GPU code, use:

nvcc reduction_gpu_1.cu -o reduction_gpu_1

Before we start optimizing the GPU code, we need to fix one big problem with our approach: on both CPU and GPU, the sum becomes invalid for arrays of large size. Indeed, we are summing random values between 0 and 1. If the number of these values is large enough, the sum should be approximately half of the number of the elements. But running the code for \(10^8\) elements results in a number is significantly lower.

Why the number is significantly lower than expected for large vectors? How can one fix this?

Try running the progrem for 100000000 elements. What is the expected reduction value? Compare it with what you are getting.

Solution

Even though the numbers we are summing up have similar value (from 0 to 1), we are accumulating them to a single precision floating point number. The sum in this number becomes large and at some point we are adding small number to a big number. The floating point numbers are stored as a set of significant digits and an exponent. When adding them up, the exponent has to be equalized. The significant numbers in the small number are then shifted to match the exponent of the big number. When the significant numbers run out, it becomes zero. For instance, \(0.5=0.500*10^1=0.050*10^2=0.005*10^3=0.000*10^4\). The number of significant digits for single precision floating point is about 8 in decimal arithmetic. So, when we are adding about \(10^8\) numbers of approximately the same value, their values will be lost. The easiest way to solve this problem is to use double precision for accumulated value. Double precision has about 15 significant digits in decimal arithmetic. However, more robust approach would be to do the summation by pairs, as illustrated on the figure below.

There is another problem with the GPU code as well. The reduction is running in many threads that all access the same location in the memory atomically. One should expect a huge queue of threads trying to save their data. The good thing that solving the first problem helps us to solves the second one, as we will see below.

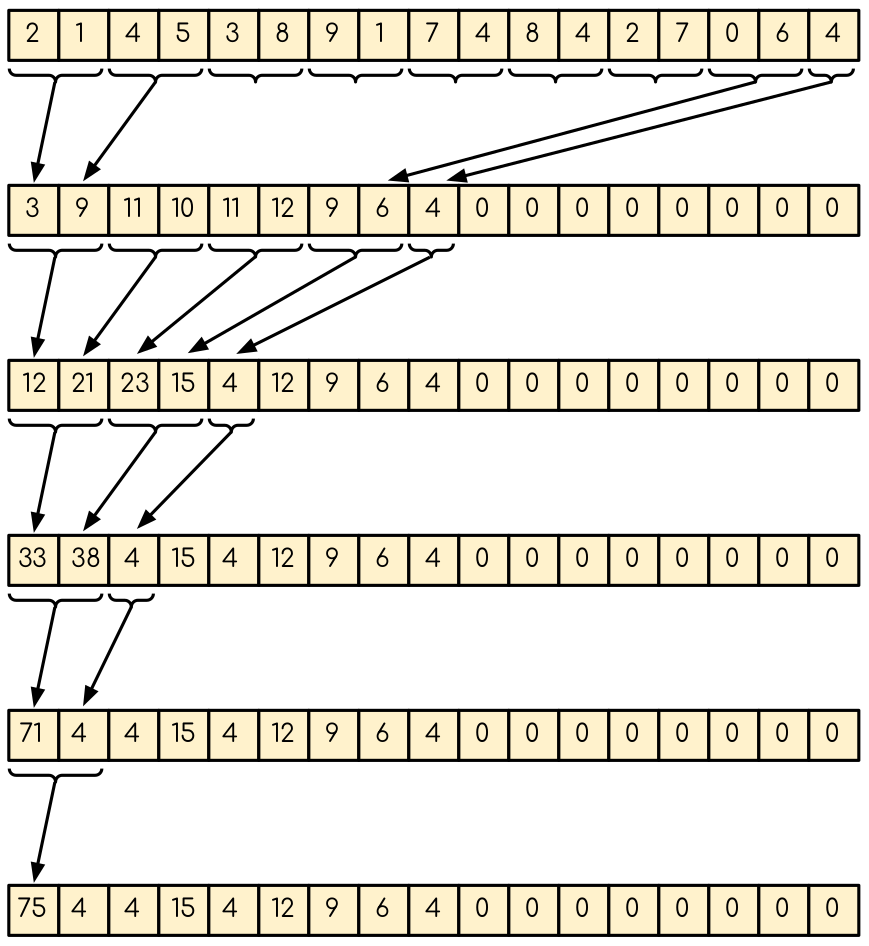

2. Pair-wise reduction

Let us first fix the CPU code, so that the result will be correct for larger arrays. The figure below shows one of the options how the correctness can be fixed even for large arrays. The idea is to make sure that only numbers of similar value are added together. This can be done by summing the elements by pairs. These binary sum should be of similar value as well, so the procedure can be repeated until the final value is obtained.

A pair-wise reduction algorithm on a CPU. The array is split into pairs, which are added together, resulting with the array half a size. The procedure is then repeated until all the values are added.

Let us fix the CPU code with the approach described by the figure above.

Fix the accuracy for large number of elements

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float result = 0.0;

// Timing

clock_t start = clock();

// Main loop

for (int i = 0; i < numElements; i++)

{

result = result + data[i];

}

// Timing

clock_t finish = clock();

printf("The result is: %f\n", result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(data);

return 0;

}

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

void reduce(const float* data, float* result, int numElements)

{

for (int i = 0; i < numElements/2; i++)

{

result[i] = data[2*i] + data[2*i + 1];

}

if (numElements % 2 != 0)

{

result[0] += data[numElements-1];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float* result = (float*)calloc(numElements, sizeof(float));

// Timing

clock_t start = clock();

reduce(data, result, numElements);

// Main loop

for (int numElementsCurrent = numElements/2; numElementsCurrent > 1; numElementsCurrent /= 2)

{

reduce(result, result, numElementsCurrent);

}

// Timing

clock_t finish = clock();

printf("The result is: %f\n", result[0]);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(data);

free(result);

return 0;

}

Since, we are doing the reduction one element at a time, we will now need an array to hold the reduction results.

Create a reduce function that will take an input array, do the pair-wise addition and save the results. This function will half the number of the elements to reduce, hence it should be called many times, until the final value is obtained. Since the elements are computed sequentially, one does not need to worry about overwriting the data that was not yes used: the input index will be always ahead of the output index. Hence there is no need in separate data array for the intermediate results: the pair-wise added values can be saved into the same array used for input.

As long as the number of elements is even, we are fine. But in case it is odd, we need to deal with the last element of the array separately. The easiest way to solve this problem is to add the last element to the first element of the sum in case the array has odd number of values.

Construct a loop that will call the reduction function many times, until the reduction size converges to 1.

Compile and run the code. Make sure it produces the right result with large number of elements in the array (i.e. with \(N>10^8\)).

Having this CPU version gives us a reference that can be handy while adapting the GPU code.

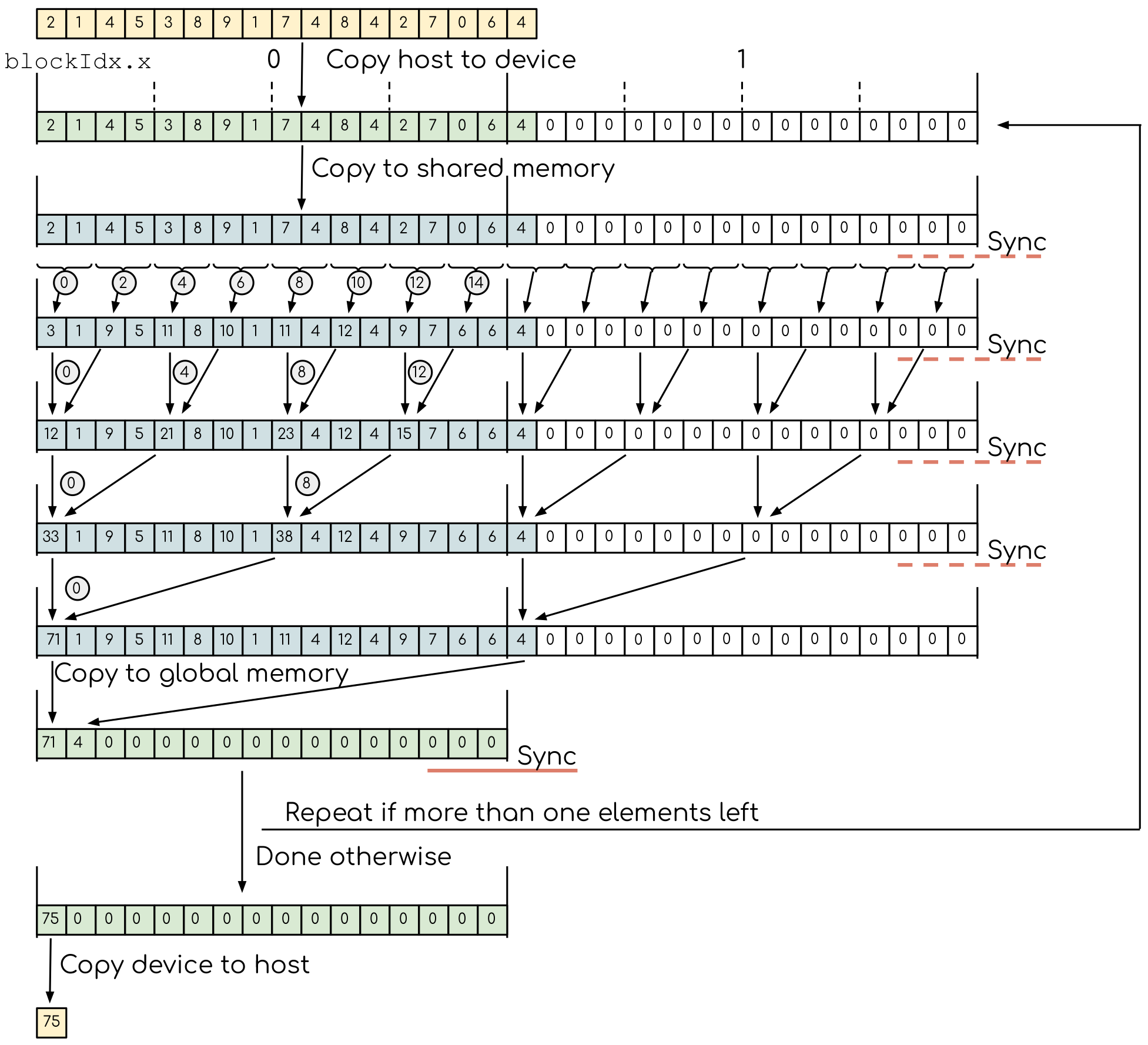

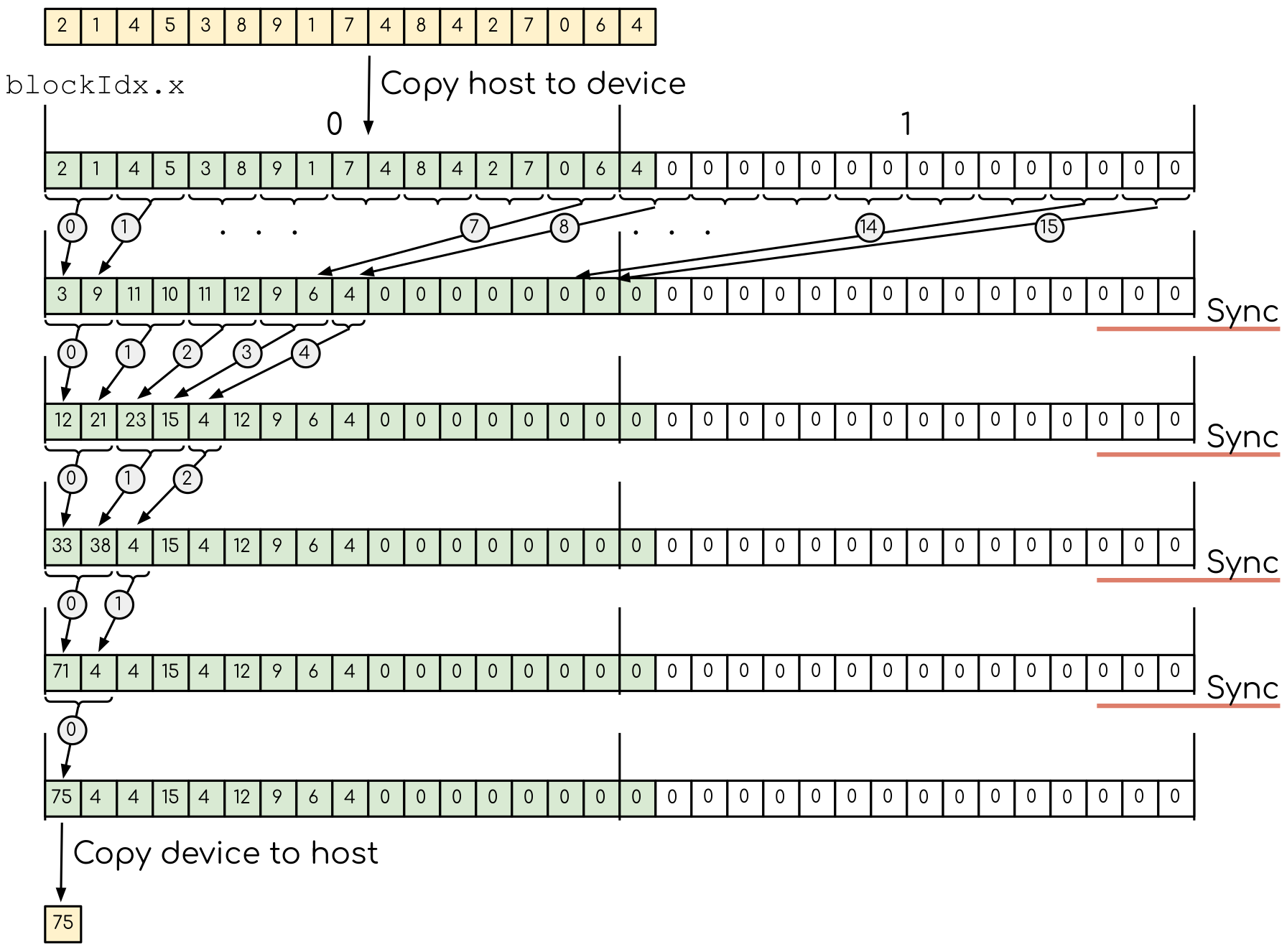

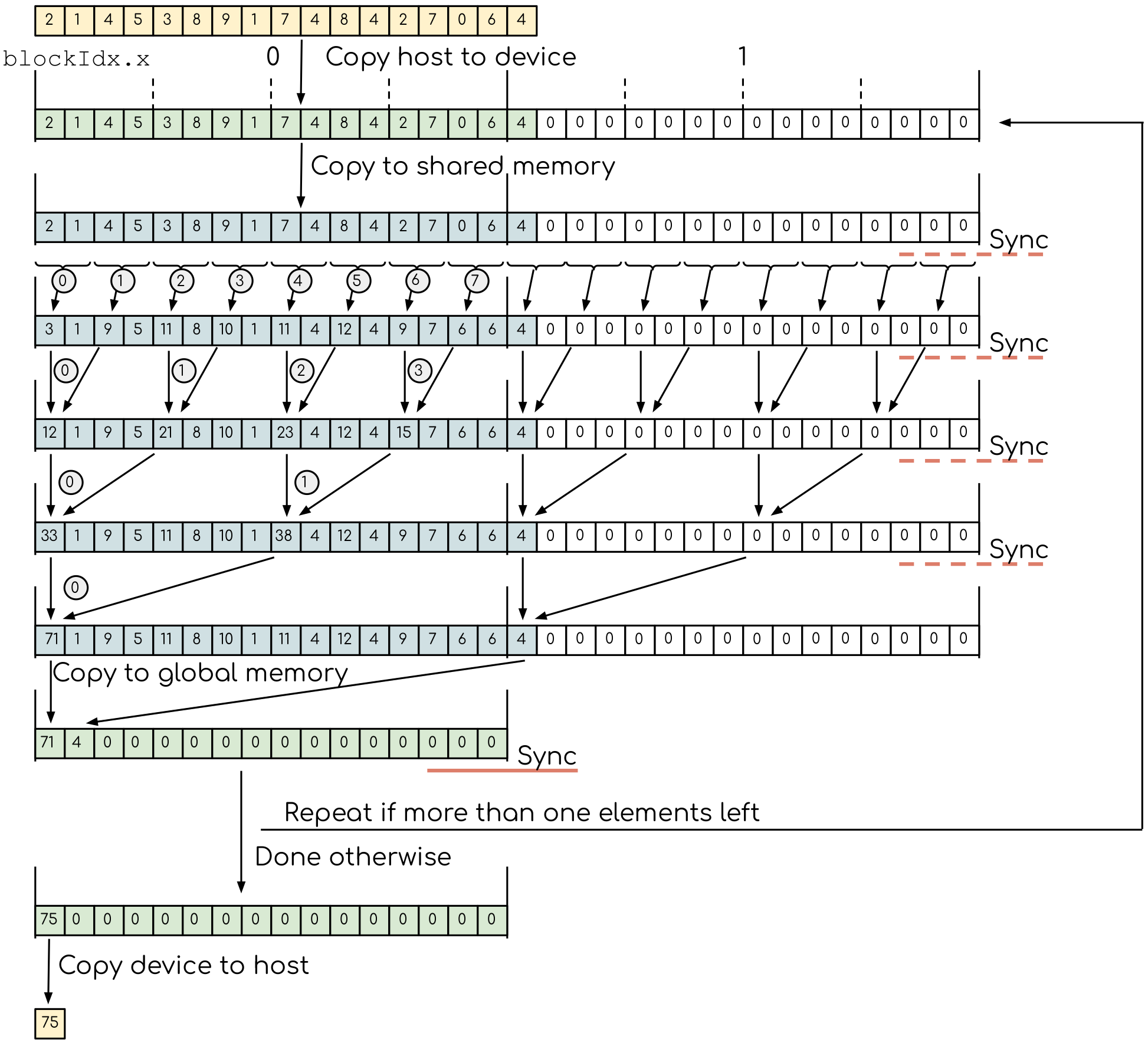

Maping pair-style addition algorithm to CUDA. Each kernel call does one binary addition per GPU thread. The execution is than returned to the CPU so that all the threads are in-sync. The kernel is called again with the new array as an input. This continues untill only one element is left. The numbers in circles indicate which thread does the specific operation. The values that are out of bonds are set to zeroes to make sure that all threads get the data.

Let us use the same approach to fix the GPU code.

Fix the accuracy for large number of elements

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

void reduce(const float* data, float* result, int numElements)

{

for (int i = 0; i < numElements/2; i++)

{

result[i] = data[2*i] + data[2*i + 1];

}

if (numElements % 2 != 0)

{

result[0] += data[numElements-1];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float* result = (float*)calloc(numElements, sizeof(float));

// Timing

clock_t start = clock();

reduce(data, result, numElements);

// Main loop

for (int numElementsCurrent = numElements/2; numElementsCurrent > 1; numElementsCurrent /= 2)

{

reduce(result, result, numElementsCurrent);

}

// Timing

clock_t finish = clock();

printf("The result is: %f\n", result[0]);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(data);

free(result);

return 0;

}

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

#define BLOCK_SIZE 256

__device__ __forceinline__ float getValue(const float* data, int index, int numElements)

{

if(index < numElements)

{

return data[index];

}

else

{

return 0.0f;

}

}

__global__ void reduce_kernel(const float* data, float* result, int numElements)

{

int d_i = threadIdx.x + blockIdx.x*blockDim.x;

result[d_i] = getValue(data, 2*d_i, numElements) + getValue(data, 2*d_i + 1, numElements);

if (d_i == 0 && numElements % 2 != 0)

{

result[d_i] += data[numElements-1];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* h_data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

h_data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float h_result = 0.0;

float* d_data;

cudaMalloc((void**)&d_data, numElements*sizeof(float));

cudaMemcpy(d_data, h_data, numElements*sizeof(float), cudaMemcpyHostToDevice);

int threadsPerBlock = BLOCK_SIZE;

int numBlocks = numElements/2/BLOCK_SIZE + 1;

float* d_result1;

float* d_result2;

cudaMalloc((void**)&d_result1, numElements*sizeof(float));

cudaMalloc((void**)&d_result2, numElements*sizeof(float));

// Timing

clock_t start = clock();

// Main loop

reduce_kernel<<<numBlocks, threadsPerBlock>>>(d_data, d_result1, numElements);

for (int numElementsCurrent = numElements/2; numElementsCurrent > 1; numElementsCurrent = numElementsCurrent/2)

{

int numBlocksCurrent = numElementsCurrent/2/BLOCK_SIZE + 1;

reduce_kernel<<<numBlocksCurrent, threadsPerBlock>>>(d_result1, d_result2, numElementsCurrent);

std::swap(d_result1, d_result2);

}

cudaMemcpy(&h_result, d_result1, 1*sizeof(float), cudaMemcpyDeviceToHost);

// Timing

clock_t finish = clock();

printf("The result is: %f\n", h_result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(h_data);

cudaFree(d_data);

cudaFree(d_result1);

cudaFree(d_result2);

return 0;

}

Change the extension of the file to

.cuso that thenvccexpects GPU code in it.Create a device-side array for the input and copy the data.

Contrary to the CPU, the execution on a GPU will not be sequential. This can cause problem if we use the same array for both input and output. Hence, we will create two separate arrays for the output and swap them from one reduction call to the other.

Change the reduction function call to the kernel calls. Make sure that you recompute the number of blocks value as the reduction array becomes smaller.

Since the number of threads on the GPU is a multiple of the block size, it is convenient to create a helper function that will return the element of the array if it is in bonds and zero otherwise. This function should have

__device__specifier. To ensure that having this in a separate function does not affect the performance, we can ask the compiler to inline ib by adding a__forceinline__specifier:__device__ __forceinline__ float getValue(const float* data, int index, int numElements) { if(index < numElements) { return data[index]; } else { return 0.0f; } }

Change the reduction function from CPU reduction code into a kernel. The loop can now be removed with the thread index replacing the loop index. This can go out of bonds, so use the helper function that we created to get the input elements. The last element in case their number is odd should be dealt with only once, so we can designate the first thread to do it (i.e. the thread with index 0).

Compile the code with

nvcccompiler. Run it with arrays of large size to make sure that the resuls are correct.

Now we ensured that the result is correct. Also note, that the performance of the new implementation is quite a lot better: we got rid of the bottleneck of many threads writing to the same memory address simultaneously. In many cases, this first round of optimization would be sufficient for the GPU to outperform CPU. However, there is still huge room for improvement in terms of the performance.

4. Reduce thread divergency

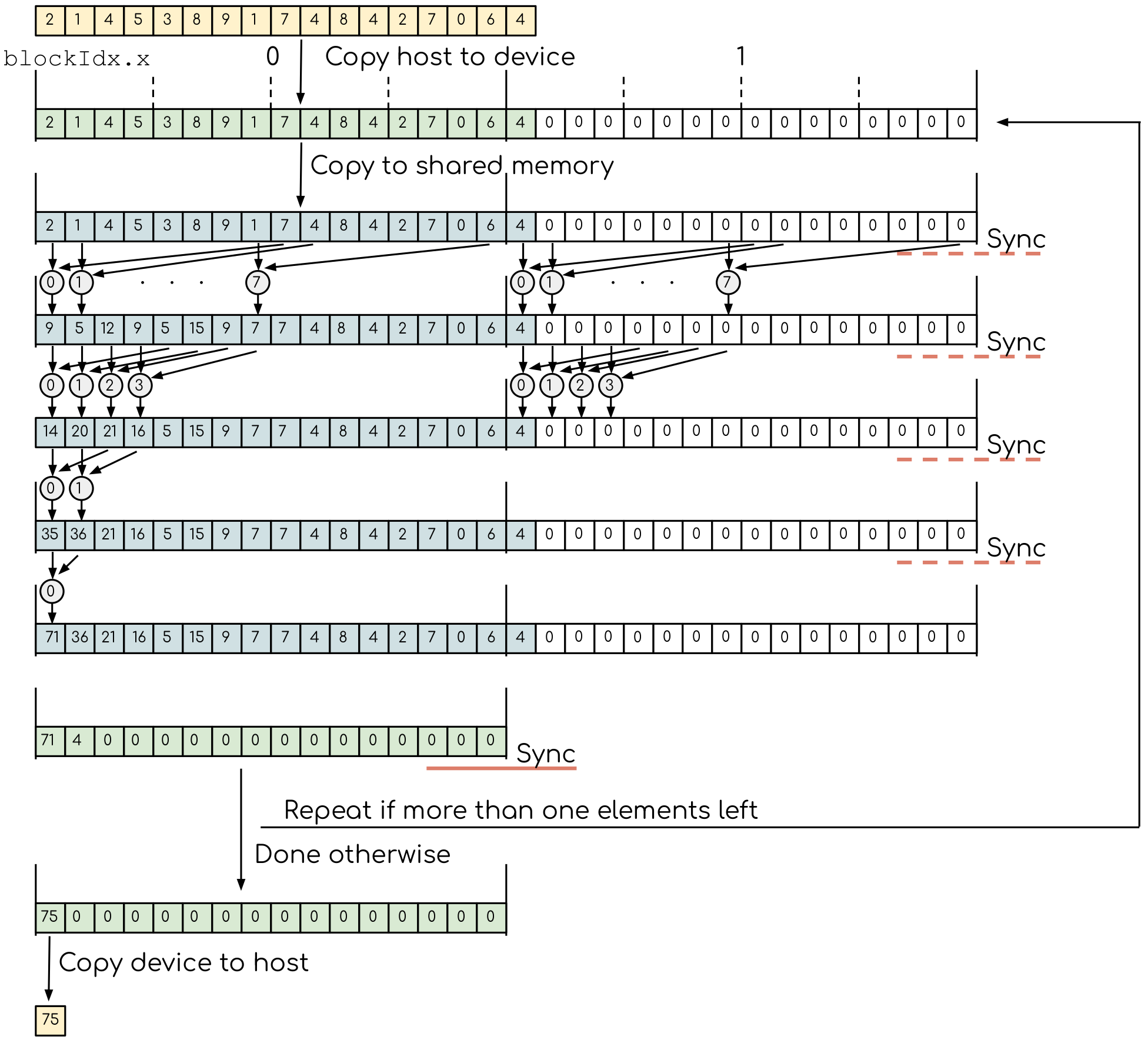

In the previous call, we ask the thread that correspond to the value that is reduced to do the work. This is not effective on a GPU: neighboring threads will diverge from one onother. Next optimization step will be fixing that. Let us try to modify the code so that the first threads in the block do the reduction.

This figure may look similar to the one before. But have a look on the numbers in the gray circles. They are the number of threads that do the reduction. As one can see, they are now sequential, meaning that neighboring threads will more likely to take the same path in the conditionals. This is espetially important for the threads within one warp, where both paths are taken in case the divergence occurs.

Reduce thread divergency

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

#define BLOCK_SIZE 256

__device__ __forceinline__ float getValue(const float* data, int index, int numElements)

{

if(index < numElements)

{

return data[index];

}

else

{

return 0.0f;

}

}

__global__ void reduce_kernel(const float* data, float* result, int numElements)

{

extern __shared__ float s_data[];

int s_i = threadIdx.x;

int d_i = threadIdx.x + blockIdx.x*blockDim.x;

s_data[s_i] = getValue(data, d_i, numElements);

for (int offset = 1; offset < blockDim.x; offset *= 2)

{

__syncthreads();

if (s_i % (2*offset) == 0)

{

s_data[s_i] += s_data[s_i + offset];

}

}

if (s_i == 0)

{

result[blockIdx.x] = s_data[0];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* h_data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

h_data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float h_result = 0.0;

float* d_data;

cudaMalloc((void**)&d_data, numElements*sizeof(float));

cudaMemcpy(d_data, h_data, numElements*sizeof(float), cudaMemcpyHostToDevice);

int threadsPerBlock = BLOCK_SIZE;

int numBlocks = numElements/BLOCK_SIZE + 1;

float* d_result1;

float* d_result2;

cudaMalloc((void**)&d_result1, numBlocks*sizeof(float));

cudaMalloc((void**)&d_result2, numBlocks*sizeof(float));

// Timing

clock_t start = clock();

// Main loop

reduce_kernel<<<numBlocks, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_data, d_result1, numElements);

for (int numElementsCurrent = numBlocks; numElementsCurrent > 1; )

{

int numBlocksCurrent = numElementsCurrent/BLOCK_SIZE + 1;

reduce_kernel<<<numBlocksCurrent, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_result1, d_result2, numElementsCurrent);

numElementsCurrent = numBlocksCurrent;

std::swap(d_result1, d_result2);

}

cudaMemcpy(&h_result, d_result1, 1*sizeof(float), cudaMemcpyDeviceToHost);

// Timing

clock_t finish = clock();

printf("The result is: %f\n", h_result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(h_data);

cudaFree(d_data);

cudaFree(d_result1);

cudaFree(d_result2);

return 0;

}

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

#define BLOCK_SIZE 256

__device__ __forceinline__ float getValue(const float* data, int index, int numElements)

{

if(index < numElements)

{

return data[index];

}

else

{

return 0.0f;

}

}

__global__ void reduce_kernel(const float* data, float* result, int numElements)

{

extern __shared__ float s_data[];

int s_i = threadIdx.x;

int d_i = threadIdx.x + blockIdx.x*blockDim.x;

s_data[s_i] = getValue(data, d_i, numElements);

for (int offset = 1; offset < blockDim.x; offset *= 2)

{

__syncthreads();

int index = 2 * offset * s_i;

if (index < blockDim.x)

{

s_data[index] += s_data[index + offset];

}

}

if (s_i == 0)

{

result[blockIdx.x] = s_data[0];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* h_data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

h_data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float h_result = 0.0;

float* d_data;

cudaMalloc((void**)&d_data, numElements*sizeof(float));

cudaMemcpy(d_data, h_data, numElements*sizeof(float), cudaMemcpyHostToDevice);

int threadsPerBlock = BLOCK_SIZE;

int numBlocks = numElements/BLOCK_SIZE + 1;

float* d_result1;

float* d_result2;

cudaMalloc((void**)&d_result1, numBlocks*sizeof(float));

cudaMalloc((void**)&d_result2, numBlocks*sizeof(float));

// Timing

clock_t start = clock();

// Main loop

reduce_kernel<<<numBlocks, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_data, d_result1, numElements);

for (int numElementsCurrent = numBlocks; numElementsCurrent > 1; )

{

int numBlocksCurrent = numElementsCurrent/BLOCK_SIZE + 1;

reduce_kernel<<<numBlocksCurrent, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_result1, d_result2, numElementsCurrent);

numElementsCurrent = numBlocksCurrent;

std::swap(d_result1, d_result2);

}

cudaMemcpy(&h_result, d_result1, 1*sizeof(float), cudaMemcpyDeviceToHost);

// Timing

clock_t finish = clock();

printf("The result is: %f\n", h_result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(h_data);

cudaFree(d_data);

cudaFree(d_result1);

cudaFree(d_result2);

return 0;

}

Change the thread indexing where to make sure that first threads are doing the reduction. This is easier to do if one compute the index of the reduced value from the thread index.

5. Sequential memory access

Now, the cosequent threads do the work, we can address another issue with the code: memory access pattern. Even though GPU has relatively fast memory bus, it is utilized by many threads simultaneously. To add to the problem, the cache size is small relative to the CPU — GPUs are design to pack as many cores as possible, thus less transistors are left for the local memory. This makes the memory access pattern one of the most important thing when optimizing the kernels.

Let us change the kernel so that the sequential GPU threads read the sequential memory addresses. Since two values are added at a time, they will be separated by the offset that is large enough to accommodate other threads. This means that the shared memory array should be split into two parts at each iterations: one for the first values for all the threads, the other is for the second. The offset, or separation value, will be reduced from one iteration to the other with less values to reduce.

A scheme for the algorithm, where the memory is accessed sequentially. At each iteration the reduced values are split into two equal parts which are read sequentially by sequential threads. With less values left to reduced, the offset decreases, until it is equal to one for the last pair. Note that all the relevant values are kept at the beginning of the array, thus the data read is less scattered.

Sequential memory access

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

#define BLOCK_SIZE 256

__device__ __forceinline__ float getValue(const float* data, int index, int numElements)

{

if(index < numElements)

{

return data[index];

}

else

{

return 0.0f;

}

}

__global__ void reduce_kernel(const float* data, float* result, int numElements)

{

extern __shared__ float s_data[];

int s_i = threadIdx.x;

int d_i = threadIdx.x + blockIdx.x*blockDim.x;

s_data[s_i] = getValue(data, d_i, numElements);

for (int offset = 1; offset < blockDim.x; offset *= 2)

{

__syncthreads();

int index = 2 * offset * s_i;

if (index < blockDim.x)

{

s_data[index] += s_data[index + offset];

}

}

if (s_i == 0)

{

result[blockIdx.x] = s_data[0];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* h_data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

h_data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float h_result = 0.0;

float* d_data;

cudaMalloc((void**)&d_data, numElements*sizeof(float));

cudaMemcpy(d_data, h_data, numElements*sizeof(float), cudaMemcpyHostToDevice);

int threadsPerBlock = BLOCK_SIZE;

int numBlocks = numElements/BLOCK_SIZE + 1;

float* d_result1;

float* d_result2;

cudaMalloc((void**)&d_result1, numBlocks*sizeof(float));

cudaMalloc((void**)&d_result2, numBlocks*sizeof(float));

// Timing

clock_t start = clock();

// Main loop

reduce_kernel<<<numBlocks, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_data, d_result1, numElements);

for (int numElementsCurrent = numBlocks; numElementsCurrent > 1; )

{

int numBlocksCurrent = numElementsCurrent/BLOCK_SIZE + 1;

reduce_kernel<<<numBlocksCurrent, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_result1, d_result2, numElementsCurrent);

numElementsCurrent = numBlocksCurrent;

std::swap(d_result1, d_result2);

}

cudaMemcpy(&h_result, d_result1, 1*sizeof(float), cudaMemcpyDeviceToHost);

// Timing

clock_t finish = clock();

printf("The result is: %f\n", h_result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(h_data);

cudaFree(d_data);

cudaFree(d_result1);

cudaFree(d_result2);

return 0;

}

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

#define BLOCK_SIZE 256

__device__ __forceinline__ float getValue(const float* data, int index, int numElements)

{

if(index < numElements)

{

return data[index];

}

else

{

return 0.0f;

}

}

__global__ void reduce_kernel(const float* data, float* result, int numElements)

{

extern __shared__ float s_data[];

int s_i = threadIdx.x;

int d_i = threadIdx.x + blockIdx.x*blockDim.x;

s_data[s_i] = getValue(data, d_i, numElements);

for (int offset = blockDim.x / 2; offset > 0; offset >>= 1)

{

__syncthreads();

if (s_i < offset)

{

s_data[s_i] += s_data[s_i + offset];

}

}

if (s_i == 0)

{

result[blockIdx.x] = s_data[0];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* h_data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

h_data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float h_result = 0.0;

float* d_data;

cudaMalloc((void**)&d_data, numElements*sizeof(float));

cudaMemcpy(d_data, h_data, numElements*sizeof(float), cudaMemcpyHostToDevice);

int threadsPerBlock = BLOCK_SIZE;

int numBlocks = numElements/BLOCK_SIZE + 1;

float* d_result1;

float* d_result2;

cudaMalloc((void**)&d_result1, numBlocks*sizeof(float));

cudaMalloc((void**)&d_result2, numBlocks*sizeof(float));

// Timing

clock_t start = clock();

// Main loop

reduce_kernel<<<numBlocks, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_data, d_result1, numElements);

for (int numElementsCurrent = numBlocks; numElementsCurrent > 1; )

{

int numBlocksCurrent = numElementsCurrent/BLOCK_SIZE + 1;

reduce_kernel<<<numBlocksCurrent, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_result1, d_result2, numElementsCurrent);

numElementsCurrent = numBlocksCurrent;

std::swap(d_result1, d_result2);

}

cudaMemcpy(&h_result, d_result1, 1*sizeof(float), cudaMemcpyDeviceToHost);

// Timing

clock_t finish = clock();

printf("The result is: %f\n", h_result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(h_data);

cudaFree(d_data);

cudaFree(d_result1);

cudaFree(d_result2);

return 0;

}

Change the loop over the offset values so that the offset goes from hald of the block size to 1. To get the block size, one can use

blockDim.xvariable.`Make sure that the each working thread reads the value that corresponds to it and adds the one with the current ofset from it.

6. Load two values at a time

At the very first iteration, the half of the threads are not doing any reduction. The only thing that the second half of the threads are doing is loading the data into the shared memory. This can be easily fixed by loading two numbers in each thread and reducing them before saving to the shared memory. In this case all threads will have some computations to do and less resources will be wasted.

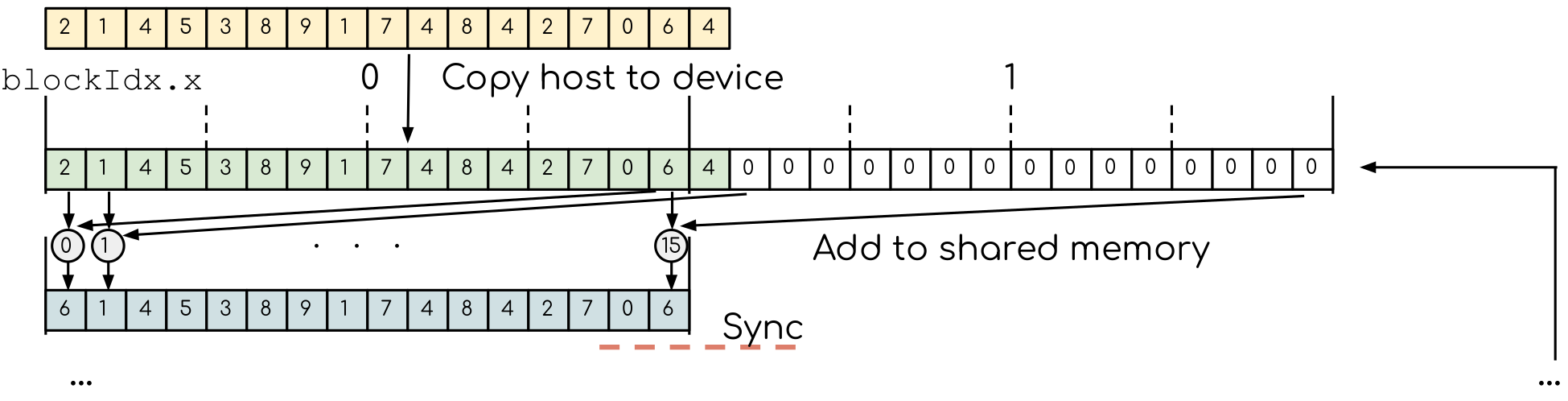

Only part of the algorithm that needs changing is shown. Each thread now takes two values from the global memory and reduce it immediately to the respective location in shared memory.

Load two values at a time

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

#define BLOCK_SIZE 256

__device__ __forceinline__ float getValue(const float* data, int index, int numElements)

{

if(index < numElements)

{

return data[index];

}

else

{

return 0.0f;

}

}

__global__ void reduce_kernel(const float* data, float* result, int numElements)

{

extern __shared__ float s_data[];

int s_i = threadIdx.x;

int d_i = threadIdx.x + blockIdx.x*blockDim.x;

s_data[s_i] = getValue(data, d_i, numElements);

for (int offset = blockDim.x / 2; offset > 0; offset >>= 1)

{

__syncthreads();

if (s_i < offset)

{

s_data[s_i] += s_data[s_i + offset];

}

}

if (s_i == 0)

{

result[blockIdx.x] = s_data[0];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* h_data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

h_data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float h_result = 0.0;

float* d_data;

cudaMalloc((void**)&d_data, numElements*sizeof(float));

cudaMemcpy(d_data, h_data, numElements*sizeof(float), cudaMemcpyHostToDevice);

int threadsPerBlock = BLOCK_SIZE;

int numBlocks = numElements/BLOCK_SIZE + 1;

float* d_result1;

float* d_result2;

cudaMalloc((void**)&d_result1, numBlocks*sizeof(float));

cudaMalloc((void**)&d_result2, numBlocks*sizeof(float));

// Timing

clock_t start = clock();

// Main loop

reduce_kernel<<<numBlocks, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_data, d_result1, numElements);

for (int numElementsCurrent = numBlocks; numElementsCurrent > 1; )

{

int numBlocksCurrent = numElementsCurrent/BLOCK_SIZE + 1;

reduce_kernel<<<numBlocksCurrent, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_result1, d_result2, numElementsCurrent);

numElementsCurrent = numBlocksCurrent;

std::swap(d_result1, d_result2);

}

cudaMemcpy(&h_result, d_result1, 1*sizeof(float), cudaMemcpyDeviceToHost);

// Timing

clock_t finish = clock();

printf("The result is: %f\n", h_result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(h_data);

cudaFree(d_data);

cudaFree(d_result1);

cudaFree(d_result2);

return 0;

}

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

#define BLOCK_SIZE 256

__device__ __forceinline__ float getValue(const float* data, int index, int numElements)

{

if(index < numElements)

{

return data[index];

}

else

{

return 0.0f;

}

}

__global__ void reduce_kernel(const float* data, float* result, int numElements)

{

extern __shared__ float s_data[];

int s_i = threadIdx.x;

int d_i = threadIdx.x + blockIdx.x*2*blockDim.x;

s_data[s_i] = getValue(data, d_i, numElements) + getValue(data, d_i + blockDim.x, numElements);

for (int offset = blockDim.x / 2; offset > 0; offset >>= 1)

{

__syncthreads();

if (s_i < offset)

{

s_data[s_i] += s_data[s_i + offset];

}

}

if (s_i == 0)

{

result[blockIdx.x] = s_data[0];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* h_data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

h_data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float h_result = 0.0;

float* d_data;

cudaMalloc((void**)&d_data, numElements*sizeof(float));

cudaMemcpy(d_data, h_data, numElements*sizeof(float), cudaMemcpyHostToDevice);

int threadsPerBlock = BLOCK_SIZE;

int numBlocks = numElements/2/BLOCK_SIZE + 1;

float* d_result1;

float* d_result2;

cudaMalloc((void**)&d_result1, numBlocks*sizeof(float));

cudaMalloc((void**)&d_result2, numBlocks*sizeof(float));

// Timing

clock_t start = clock();

// Main loop

reduce_kernel<<<numBlocks, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_data, d_result1, numElements);

for (int numElementsCurrent = numBlocks; numElementsCurrent > 1; )

{

int numBlocksCurrent = numElementsCurrent/2/BLOCK_SIZE + 1;

reduce_kernel<<<numBlocksCurrent, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_result1, d_result2, numElementsCurrent);

numElementsCurrent = numBlocksCurrent;

std::swap(d_result1, d_result2);

}

cudaMemcpy(&h_result, d_result1, 1*sizeof(float), cudaMemcpyDeviceToHost);

// Timing

clock_t finish = clock();

printf("The result is: %f\n", h_result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(h_data);

cudaFree(d_data);

cudaFree(d_result1);

cudaFree(d_result2);

return 0;

}

Change the part of the code where the values are saved to the shared memory so that two values are read simultaneously and the first pairwise reduction is done.

Only half as many thread blocks are now needed, so the kernel launch configuration and loop over the kernel launches should be changed accordingly.

7. Unroll the last warp

The GPUs are often refereed to having Single Instruction Multiple Threads (SIMT) architecture. This is to separate them from Single Instruction Multiple Data (SIMD) devices. The main difference is that different threads can execute different instructions. However, this is only true, when the threads in question are outside the same warp. Warp is a unit of threads that executes the same instructions for all the threads. In a warp any thread divergence will take both paths in every thread even when only one of them will take an alternative path. On NVidia GPUs, the warp is a unit of 32 threads, which means that when we get to that many threads, special care should be taken to make sure that there is no divergence. In fact, even checking for the conditional will slow the execution down. The good thing is that, inside the warp, all the threads do the same operation at the same time, which can be used to remove explicit synchronization calls.

In our code, we slowly reduce the number of active threads from the block width to 2 on the last iteration. When the number of active threads reaches the size of warp, all the active threads are within the same warp and we can manually unroll the last iterations. While doing so, we will ask all the threads to do the reduction, not only those that produce the numbers needed at the next iteration. It may look like we are asking the GPU to do extra work, but, in fact, we are removing extra conditional checks. Indeed, the inactive threads wold have taken diferent path where they do nothing. But since there are the threads that actually do the work, the inactive threads will idle while this is happening since they are in the same warp.

Last warp reduction for a warp of size 4 (indicated by dashed lines). Only the changed part of the algorithm is shown. Every thread computes the binary reduction at each interaction, which allows one to remove the conditional. Even though this leads to computing values that are not used, the reduction in thread divergence inside a warp will give better performance.

Unroll the last warp

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

#define BLOCK_SIZE 256

__device__ __forceinline__ float getValue(const float* data, int index, int numElements)

{

if(index < numElements)

{

return data[index];

}

else

{

return 0.0f;

}

}

__global__ void reduce_kernel(const float* data, float* result, int numElements)

{

extern __shared__ float s_data[];

int s_i = threadIdx.x;

int d_i = threadIdx.x + blockIdx.x*2*blockDim.x;

s_data[s_i] = getValue(data, d_i, numElements) + getValue(data, d_i + blockDim.x, numElements);

for (int offset = blockDim.x / 2; offset > 0; offset >>= 1)

{

__syncthreads();

if (s_i < offset)

{

s_data[s_i] += s_data[s_i + offset];

}

}

if (s_i == 0)

{

result[blockIdx.x] = s_data[0];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* h_data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

h_data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float h_result = 0.0;

float* d_data;

cudaMalloc((void**)&d_data, numElements*sizeof(float));

cudaMemcpy(d_data, h_data, numElements*sizeof(float), cudaMemcpyHostToDevice);

int threadsPerBlock = BLOCK_SIZE;

int numBlocks = numElements/2/BLOCK_SIZE + 1;

float* d_result1;

float* d_result2;

cudaMalloc((void**)&d_result1, numBlocks*sizeof(float));

cudaMalloc((void**)&d_result2, numBlocks*sizeof(float));

// Timing

clock_t start = clock();

// Main loop

reduce_kernel<<<numBlocks, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_data, d_result1, numElements);

for (int numElementsCurrent = numBlocks; numElementsCurrent > 1; )

{

int numBlocksCurrent = numElementsCurrent/2/BLOCK_SIZE + 1;

reduce_kernel<<<numBlocksCurrent, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_result1, d_result2, numElementsCurrent);

numElementsCurrent = numBlocksCurrent;

std::swap(d_result1, d_result2);

}

cudaMemcpy(&h_result, d_result1, 1*sizeof(float), cudaMemcpyDeviceToHost);

// Timing

clock_t finish = clock();

printf("The result is: %f\n", h_result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(h_data);

cudaFree(d_data);

cudaFree(d_result1);

cudaFree(d_result2);

return 0;

}

#include <stdio.h>

#include <stdlib.h>

#include <algorithm>

#include <time.h>

#define BLOCK_SIZE 256

__device__ __forceinline__ float getValue(const float* data, int index, int numElements)

{

if(index < numElements)

{

return data[index];

}

else

{

return 0.0f;

}

}

__device__ void warpReduce(volatile float* sdata, int s_i)

{

sdata[s_i] += sdata[s_i + 32];

sdata[s_i] += sdata[s_i + 16];

sdata[s_i] += sdata[s_i + 8];

sdata[s_i] += sdata[s_i + 4];

sdata[s_i] += sdata[s_i + 2];

sdata[s_i] += sdata[s_i + 1];

}

__global__ void reduce_kernel(const float* data, float* result, int numElements)

{

extern __shared__ float s_data[];

int s_i = threadIdx.x;

int d_i = threadIdx.x + blockIdx.x*2*blockDim.x;

s_data[s_i] = getValue(data, d_i, numElements) + getValue(data, d_i + blockDim.x, numElements);

for (int offset = blockDim.x / 2; offset > 32; offset >>= 1)

{

__syncthreads();

if (s_i < offset)

{

s_data[s_i] += s_data[s_i + offset];

}

}

if (s_i < 32)

{

warpReduce(s_data, s_i);

}

if (s_i == 0)

{

result[blockIdx.x] = s_data[0];

}

}

int main(int argc, char* argv[])

{

int numElements = (argc > 1) ? atoi(argv[1]) : 100000000;

printf("Reducing over %d values.\n", numElements);

float* h_data = (float*)calloc(numElements, sizeof(float));

srand(1214134);

for (int i = 0; i < numElements; i++)

{

h_data[i] = float(rand())/float(RAND_MAX + 1.0);

}

float h_result = 0.0;

float* d_data;

cudaMalloc((void**)&d_data, numElements*sizeof(float));

cudaMemcpy(d_data, h_data, numElements*sizeof(float), cudaMemcpyHostToDevice);

int threadsPerBlock = BLOCK_SIZE;

int numBlocks = numElements/2/BLOCK_SIZE + 1;

float* d_result1;

float* d_result2;

cudaMalloc((void**)&d_result1, numBlocks*sizeof(float));

cudaMalloc((void**)&d_result2, numBlocks*sizeof(float));

// Timing

clock_t start = clock();

// Main loop

reduce_kernel<<<numBlocks, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_data, d_result1, numElements);

for (int numElementsCurrent = numBlocks; numElementsCurrent > 1; )

{

int numBlocksCurrent = numElementsCurrent/2/BLOCK_SIZE + 1;

reduce_kernel<<<numBlocksCurrent, threadsPerBlock, threadsPerBlock*sizeof(float)>>>(d_result1, d_result2, numElementsCurrent);

numElementsCurrent = numBlocksCurrent;

std::swap(d_result1, d_result2);

}

cudaMemcpy(&h_result, d_result1, 1*sizeof(float), cudaMemcpyDeviceToHost);

// Timing

clock_t finish = clock();

printf("The result is: %f\n", h_result);

printf("It took %f seconds\n", (double)(finish - start) / CLOCKS_PER_SEC);

// Release the memory

free(h_data);

cudaFree(d_data);

cudaFree(d_result1);

cudaFree(d_result2);

return 0;

}

Create a separate

__device__function that will handle the last warp reduction. This function should take the shared memory array of values and the index of the thread within the block. Manually unwrap the loop of 6 reductions (\(32 = 2^5\) plus one extra reduction to get the last value). Note that the shared memory array argument should havevolatilequalifier to tell the compiler not to optimize the code.Reduce the number of iteration in the main kernel and call the new warp reduction function for the lase 32 values.`

8. Further improvements

There is more one can do with the current code to get even better performance. Please, see this excelent presentation from Mark Harris (NVidia) for some ideas.