Introduction

Overview

Teaching: 40 min

Exercises: 15 min

Questions

What is Deep Learning?

When does it make sense to use and not use Deep Learning?

When is it successful?

What are the tools involved?

What is the workflow for Deep Learning?

Why did we choose to use Keras in this lesson?

Objectives

Recall the sort of problems for which Deep Learning is a useful tool

List some of the available tools for Deep Learning

Recall the steps of a Deep Learning workflow

Explain why it is important to test the accuracy of a Deep Learning system.

Identify the inputs and outputs of a Deep Learning system.

Test that we’ve correctly installed the Keras, Seaborn and Sklearn libraries

What is Deep Learning?

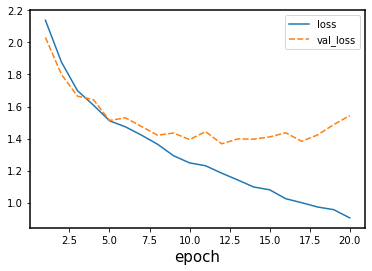

Deep Learning, Machine Learning and Artificial Intelligence

Deep Learning (DL) is just one of many techniques collectively known as machine learning. Machine learning (ML) refers to techniques where a computer can “learn” patterns in data, usually by being shown numerous examples to train it. People often talk about machine learning being a form of artificial intelligence (AI). Definitions of artificial intelligence vary, but usually involve having computers mimic the behaviour of intelligent biological systems. Since the 1950s, many works of science fiction have dealt with the idea of an artificial intelligence that matches (or exceeds) human intelligence in all areas. Although there have been great advances in AI and ML research recently, we can only come close to human-like intelligence in a few specialist areas and are still a long way from a general-purpose AI. The image below shows some differences between Artificial Intelligence, Machine Learning and Deep Learning.

The image above is by Tukijaaliwa, CC BY-SA 4.0, via Wikimedia Commons, original source


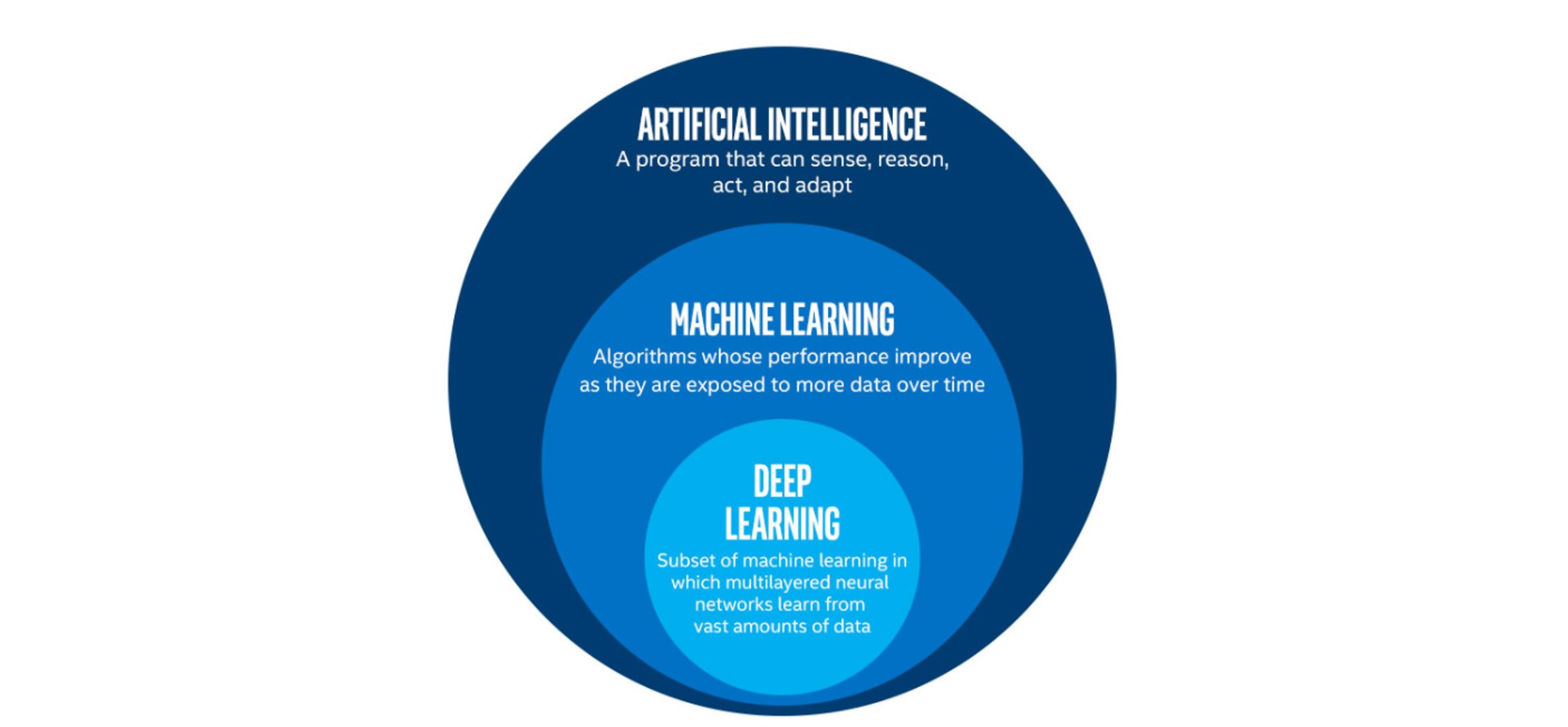

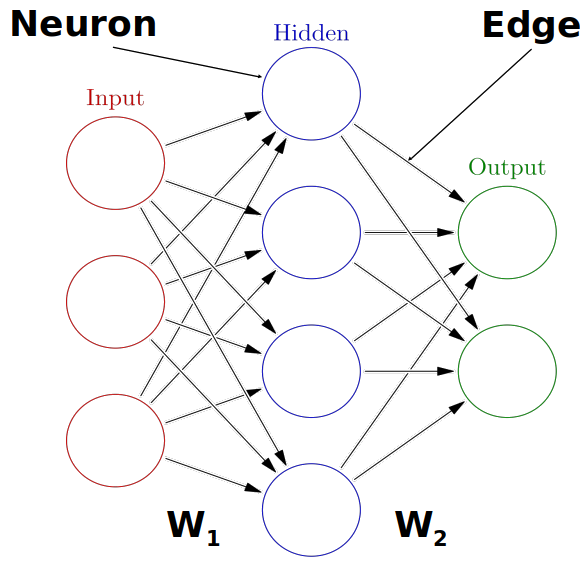

Neural Networks

A neural network is an artificial intelligence technique loosely based on the way neurons in the brain work. A neural network consists of connected computational units called neurons. Each neuron …

- has one or more inputs, e.g. input data expressed as floating point numbers

- most of the time, each neuron conducts 3 main operations:

  - take the weighted sum of the inputs

  - add an extra constant weight (i.e. a bias term) to this weighted sum

  - apply a non-linear function to the output so far (using a predefined activation function)

- return one output value, again a floating point number
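The three operations listed above can be sketched for a single neuron in a few lines of NumPy. This is a minimal illustration under assumptions of our own: the input values, weights and bias are made up, and ReLU (max(0, z)) is used as the activation function because it is a common choice.

```python
import numpy as np

def relu(z):
    """A common non-linear activation function: max(0, z)."""
    return np.maximum(0.0, z)

def neuron(inputs, weights, bias):
    """Compute the output of a single artificial neuron."""
    weighted_sum = np.dot(inputs, weights)  # 1. weighted sum of the inputs
    z = weighted_sum + bias                 # 2. add the bias term
    return relu(z)                          # 3. apply the activation function

# Hypothetical example values
x = np.array([0.5, -1.0, 2.0])
w = np.array([0.4, 0.3, 0.1])
b = 0.1
print(neuron(x, w, b))
```

With these values the weighted sum is 0.1, the bias raises it to 0.2, and ReLU leaves positive numbers unchanged, so the neuron outputs (approximately) 0.2.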

Multiple neurons can be joined together by connecting the output of one to the input of another. These connections are associated with weights that determine the ‘strength’ of the connection; the weights are adjusted during training. In this way, the combination of neurons and connections describes a computational graph; an example can be seen in the image below. In most neural networks, neurons are aggregated into layers. Signals travel from the input layer to the output layer, possibly through one or more intermediate layers called hidden layers. The image below shows an example of a neural network with three layers: each circle is a neuron, each line is an edge and the arrows indicate the direction data moves in.
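Connecting neurons into layers turns the per-neuron arithmetic into matrix multiplications. The sketch below assumes a made-up network with 3 input neurons, 4 hidden neurons and 2 output neurons, random weights, zero biases and ReLU activations on every layer (real networks often use a different activation on the output layer). Each column of a weight matrix holds the incoming edge weights of one neuron.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def forward(x, layers):
    """Pass input x through a list of (weights, biases) layers.

    Each layer computes relu(x @ W + b); column j of W holds the weights
    of the edges leading into neuron j of that layer.
    """
    activation = x
    for weights, biases in layers:
        activation = relu(activation @ weights + biases)
    return activation

rng = np.random.default_rng(0)
# Hypothetical network: 3 inputs -> 4 hidden neurons -> 2 outputs
layers = [
    (rng.normal(size=(3, 4)), np.zeros(4)),  # input layer -> hidden layer
    (rng.normal(size=(4, 2)), np.zeros(2)),  # hidden layer -> output layer
]
output = forward(np.array([1.0, 0.5, -0.5]), layers)
print(output.shape)  # (2,): one value per output neuron
```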

The image above is by Glosser.ca, CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0, via Wikimedia Commons, original source


Neural networks aren’t a new technique; they have been around since the late 1940s. But until around 2010, neural networks tended to be quite small, consisting of only tens or perhaps hundreds of neurons. This limited them to solving only quite basic problems. Around 2010, improvements in computing power and in the algorithms for training the networks made much larger and more powerful networks practical. These are known as deep neural networks, or Deep Learning.

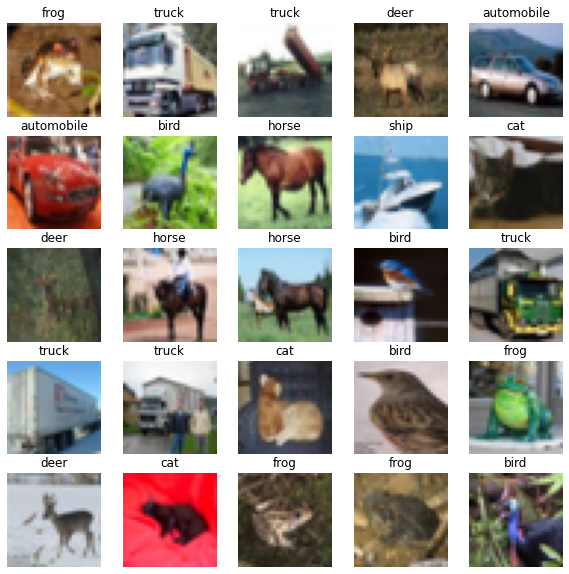

Deep Learning requires extensive training using example data which shows the network what output it should produce for a given input. One common application of Deep Learning is classifying images. Here the network will be trained by being “shown” a series of images and told what they contain. Once the network is trained, it should be able to take another image and correctly classify its contents. But we are not restricted to images: any kind of data can be learned by a Deep Learning neural network. This lets networks appear to learn a set of complex rules just by being shown the inputs and outputs of those rules, instead of being taught the actual rules. Using these approaches, Deep Learning networks have been taught to play video games and even drive cars. The data on which networks are trained usually has to be quite extensive, typically including thousands of examples. For this reason they are not suited to all applications and should be considered just one of many machine learning techniques which are available.

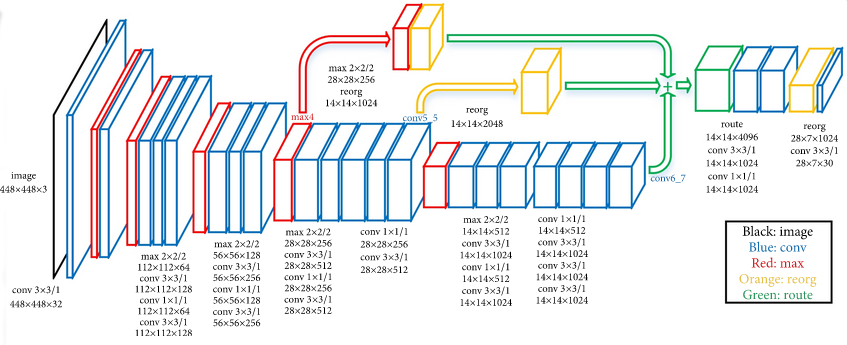

While traditional “shallow” networks might have had between three and five layers, deep networks often have tens or even hundreds of layers. This leads to them having millions of individual weights. The image below shows a diagram of all the layers (there are too many neurons to draw them all) in a Deep Learning network designed to detect pedestrians in images. The input (leftmost) layer of the network is an image and the final (rightmost) layer of the network outputs a zero or one to determine if the input data belongs to the class of data we’re interested in. This image is from the paper “An Efficient Pedestrian Detection Method Based on YOLOv2” by Zhongmin Liu, Zhicai Chen, Zhanming Li, and Wenjin Hu, published in Mathematical Problems in Engineering, Volume 2018.
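To see how quickly the number of weights grows, the sketch below counts the parameters of a stack of fully connected layers: a layer with n_in inputs and n_out neurons has n_in * n_out connection weights plus n_out biases. The layer sizes are hypothetical, and the pedestrian-detection network mentioned above uses convolutional layers, whose parameters are counted differently, so treat this only as an illustration of the scaling.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a stack of fully connected layers.

    layer_sizes lists the number of neurons per layer, input layer first.
    Each pair of consecutive layers (n_in, n_out) contributes
    n_in * n_out connection weights plus n_out bias terms.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total

# A small hypothetical network: 4 inputs, 10 hidden neurons, 3 outputs
print(count_dense_parameters([4, 10, 3]))
# A hypothetical deeper one: 1000 inputs, ten hidden layers of 1000, 2 outputs
print(count_dense_parameters([1000] + [1000] * 10 + [2]))
```

The small network has 83 parameters, while the deeper one already has over ten million (10,012,002).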

What sort of problems can Deep Learning solve?

- Pattern/object recognition

- Segmenting images (or any data)

- Translating between one set of data and another, for example natural language translation.

- Generating new data that looks similar to the training data, often used to create synthetic datasets, art or even “deepfake” videos.

- This can also be used to give the illusion of enhancing data, for example making images look sharper, video look smoother or adding colour to black and white images. But beware: this is not an accurate recreation of the original data, but a recreation based on something statistically similar, effectively a digital imagination of what that data could look like.

Examples of Deep Learning in Research

Here are just a few examples of how Deep Learning has been applied to some research problems. Note: some of these articles might be behind paywalls.

- Detecting COVID-19 in chest X-ray images

- Forecasting building energy load

- Protein function prediction

- Simulating Chemical Processes

- Help to restore ancient murals

What sort of problems can’t Deep Learning solve?

- Any case where only a small amount of training data is available.

- Tasks requiring an explanation of how the answer was arrived at.

- Classifying things which are nothing like their training data.

What sort of problems can Deep Learning solve, but shouldn’t be used for?

Deep Learning needs a lot of computational power. For this reason it often relies on specialised hardware like graphics processing units (GPUs). Many computational problems can be solved using less intensive techniques, but could still technically be solved with Deep Learning.

The following could technically be achieved using Deep Learning, but it would probably be a very wasteful way to do it:

- Logic operations, such as computing totals, averages, ranges etc. (see this example applying Deep Learning to solve the “FizzBuzz” problem often used for programming interviews)

- Modelling well defined systems, where the equations governing them are known and understood.

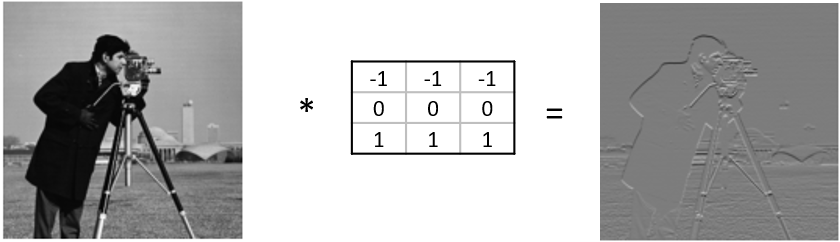

- Basic computer vision tasks such as edge detection, decreasing colour depth or blurring an image.

Deep Learning Problems Exercise

Which of the following would you apply Deep Learning to?

- Recognising whether or not a picture contains a bird.

- Calculating the median and interquartile range of a dataset.

- Identifying MRI images of a rare disease when only one or two example images are available for training.

- Identifying people in pictures after being trained only on cats and dogs.

- Translating English into French.

Solution

- Recognising birds and translating English into French are the sort of tasks often solved with Deep Learning.

- Calculating the median and interquartile range is technically possible with Deep Learning, but it would be extremely wasteful; you could do the same with much less computing power using traditional techniques.

- Identifying the rare disease will probably fail because there’s not enough training data.

- Identifying people will fail because the Deep Learning system only knows what cats and dogs look like; it might accidentally classify the people as cats or dogs.
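For comparison, the median and interquartile range from the exercise need no learning at all. Python's standard statistics module computes them directly. The dataset below is made up, and note that statistics.quantiles() uses its 'exclusive' method by default, so tools using a different quartile convention may give slightly different values.

```python
import statistics

data = [2, 4, 4, 5, 7, 9, 10]  # hypothetical dataset

median = statistics.median(data)
q1, q2, q3 = statistics.quantiles(data, n=4)  # the three quartile cut points
iqr = q3 - q1
print(median, iqr)  # prints: 5 5.0
```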

How much data do you need for Deep Learning?

The rise of Deep Learning is partially due to the increased availability of very large datasets. But how much data do you actually need to train a Deep Learning model? Unfortunately, this question is not easy to answer. It depends, among other things, on the complexity of the task (which you often don’t know beforehand), the quality of the available dataset and the complexity of the network. For complex tasks with large neural networks, we often see that adding more data continues to improve performance. However, this is also not a generic truth: if the data you add is too similar to the data you already have, it will not give much new information to the neural network.

If you have too little data available to train a complex network from scratch, it is sometimes possible to use a pretrained network that was trained on a similar problem. Another trick is data augmentation, where you expand the dataset with artificial data points that could be real. An example of this is mirroring images when trying to classify cats and dogs. A horizontally mirrored animal retains its label, but exposes a different view.
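Horizontal mirroring is easy to express with NumPy slicing. This sketch assumes grayscale images stored in an array of shape (n_images, height, width) (colour images would carry an extra channel axis) and uses two tiny made-up "images"; the function name augment_with_mirrors is our own, not part of any library.

```python
import numpy as np

def augment_with_mirrors(images, labels):
    """Double a dataset by adding horizontally mirrored copies.

    Reversing the width axis mirrors each image left-to-right;
    every mirrored copy keeps the label of its original.
    """
    mirrored = images[:, :, ::-1]
    return (np.concatenate([images, mirrored]),
            np.concatenate([labels, labels]))

# Two tiny hypothetical 2x3 "images" with labels 0 (cat) and 1 (dog)
images = np.arange(12).reshape(2, 2, 3)
labels = np.array([0, 1])
aug_images, aug_labels = augment_with_mirrors(images, labels)
print(aug_images.shape, aug_labels)
```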

Deep Learning workflow

To apply Deep Learning to a problem there are several steps we need to go through:

1. Formulate/Outline the problem

Firstly, we must decide what it is we want our Deep Learning system to do. Is it going to classify some data into one of a few categories? For example, if we have an image of some handwritten characters, the neural network could classify which character it is being shown. Or is it going to perform a prediction? For example, trying to predict what the price of something will be tomorrow given some historical data on pricing and current trends.

2. Identify inputs and outputs

Next we need to identify what the inputs and outputs of the neural network will be. This might require looking at our data and deciding what features of the data we can use as inputs. If the data is images then the inputs could be the individual pixels of the images.

For the outputs we’ll need to look at what we want to identify from the data. If we are performing a classification problem then typically we will have one output for each potential class.
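As a concrete, hypothetical example of counting inputs and outputs: if each input is a 28x28 grayscale image of a handwritten digit, using every pixel as an input gives 28 * 28 = 784 inputs, and a classifier over the ten digits needs one output per class. The image here is just an array of zeros standing in for real data.

```python
import numpy as np

# Hypothetical task: classify 28x28 grayscale images of handwritten digits
image = np.zeros((28, 28))                  # one image, one value per pixel
class_names = [str(d) for d in range(10)]   # the digits 0 to 9

inputs = image.reshape(-1)   # flatten the image: one input per pixel
n_inputs = inputs.size
n_outputs = len(class_names) # one output per potential class
print(n_inputs, n_outputs)
```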

3. Prepare data

Many datasets aren’t ready for immediate use in a neural network and will require some preparation. Neural networks can only really deal with numerical data, so any non-numerical data (for example words) will have to be somehow converted to numerical data.

Next we’ll need to divide the data into multiple sets. One of these will be used by the training process and we’ll call it the training set. Another will be used to evaluate the performance of the trained network on data it has not seen, and we’ll call that one the test set. Sometimes we’ll also use a third set, known as a validation set, to check our results during training and to tune the training settings.
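A minimal sketch of splitting data into three sets, assuming we simply shuffle the sample indices and cut them into pieces. The function name split_indices and the 70/10/20 proportions are our own choices for illustration; later in this lesson the sklearn function train_test_split is used instead.

```python
import numpy as np

def split_indices(n_samples, val_fraction, test_fraction, seed=0):
    """Shuffle sample indices and cut them into train/validation/test sets."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    n_val = int(round(n_samples * val_fraction))
    test_idx = indices[:n_test]
    val_idx = indices[n_test:n_test + n_val]
    train_idx = indices[n_test + n_val:]  # everything that is left over
    return train_idx, val_idx, test_idx

train_idx, val_idx, test_idx = split_indices(100, val_fraction=0.1, test_fraction=0.2)
print(len(train_idx), len(val_idx), len(test_idx))
```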

4. Choose a pre-trained model or build a new architecture from scratch

Often we can use an existing neural network instead of designing one from scratch. Training a network can take a lot of time and computational resources. There are a number of well-publicised networks which have been shown to perform well at certain tasks; if you know of one which already does a similar task well, then it makes sense to use it.

If we instead decide to design our own network, then we need to think about how many input neurons it will have, how many hidden layers and how many outputs, and what types of layers to use (we’ll explore the different types later on). This will probably need some experimentation, and we might have to tweak the network design a few times before we see acceptable results.

5. Choose a loss function and optimizer

The loss function tells the training algorithm how far away the predicted value was from the true value. We’ll look at choosing a loss function in more detail later on.

The optimizer is responsible for taking the output of the loss function and then applying some changes to the weights within the network. It is through this process that the “learning” (adjustment of the weights) is achieved.
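The roles of the loss function and the optimizer can be seen in a toy problem. This sketch assumes a model with just two parameters, y = w * x + b, uses the mean squared error as its loss and applies one step of plain gradient descent as the optimizer; the data are made up. Keras provides its own, more sophisticated loss functions and optimizers, so this only illustrates the idea.

```python
import numpy as np

def mse_loss(y_pred, y_true):
    """Mean squared error: the average squared distance from the true values."""
    return np.mean((y_pred - y_true) ** 2)

def gradient_descent_step(w, b, x, y_true, learning_rate):
    """One optimizer step for the model y = w * x + b."""
    error = (w * x + b) - y_true
    # Gradients of the MSE loss with respect to w and b
    grad_w = 2 * np.mean(error * x)
    grad_b = 2 * np.mean(error)
    # Move each parameter a small step against its gradient
    return w - learning_rate * grad_w, b - learning_rate * grad_b

# Hypothetical data generated by y = 2x + 1
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 2 * x + 1
w, b = 0.0, 0.0
print("loss before:", mse_loss(w * x + b, y))
w, b = gradient_descent_step(w, b, x, y, learning_rate=0.05)
print("loss after: ", mse_loss(w * x + b, y))
```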

6. Train the model

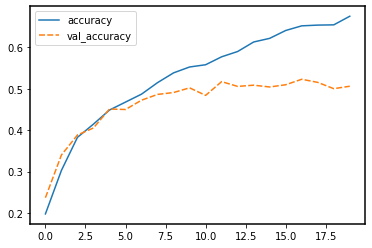

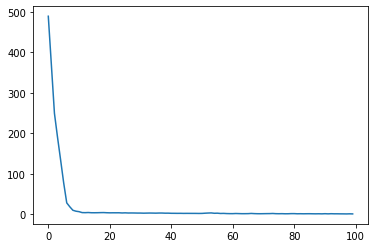

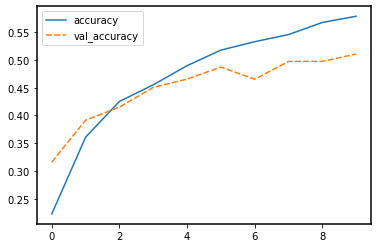

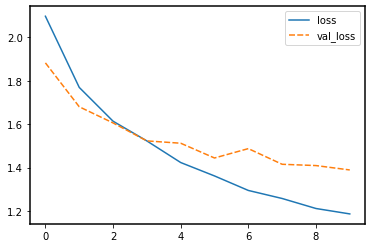

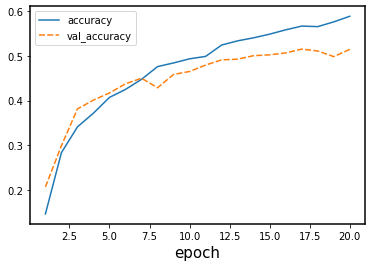

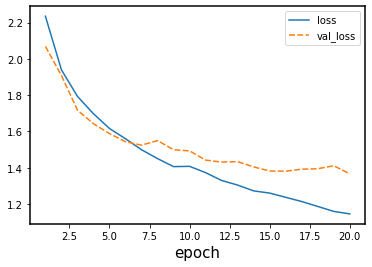

We can now go ahead and start training our neural network. We’ll probably keep doing this for a given number of iterations through our training dataset (referred to as epochs) or until the loss function gives a value under a certain threshold. The graph below shows the loss against the number of epochs: generally the loss will go down with each epoch, but occasionally it will see a small rise.
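Training is essentially a loop over epochs that repeats the optimizer step until a stopping condition is met. The sketch below assumes a toy linear model (two parameters, mean squared error, gradient descent, made-up data) and stops either after max_epochs or once the loss falls below loss_threshold. For simplicity it makes one update per epoch using the whole dataset; real training usually splits each epoch into several mini-batches.

```python
import numpy as np

def train(x, y, learning_rate=0.05, max_epochs=1000, loss_threshold=1e-3):
    """Fit y = w * x + b by gradient descent, one update per epoch.

    Stops after max_epochs, or as soon as the loss drops below
    loss_threshold. Returns the parameters and the loss of every epoch.
    """
    w, b = 0.0, 0.0
    history = []
    for _ in range(max_epochs):
        error = (w * x + b) - y
        loss = np.mean(error ** 2)
        history.append(loss)
        if loss < loss_threshold:
            break
        w -= learning_rate * 2 * np.mean(error * x)
        b -= learning_rate * 2 * np.mean(error)
    return w, b, history

x = np.array([0.0, 1.0, 2.0, 3.0])
y = 2 * x + 1  # hypothetical data
w, b, history = train(x, y)
print(f"stopped after {len(history)} epochs, final loss {history[-1]:.4f}")
```

Plotting history against the epoch number gives a loss curve like the one described above.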

7. Perform a Prediction/Classification

After training the network we can use it to perform predictions. This is the mode you would use the network in after you have fully trained it to a satisfactory performance. Doing predictions on a special hold-out set is used in the next step to measure the performance of the network.
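For a classification network, a prediction is commonly one score per class for each sample, and the predicted class is the one with the highest score. The scores below are invented for illustration; np.argmax picks the index of the largest score in each row, which is then mapped back to a class name.

```python
import numpy as np

class_names = np.array(["Adelie", "Chinstrap", "Gentoo"])

# Hypothetical network outputs: one row per sample, one score per class
predicted_scores = np.array([
    [0.80, 0.15, 0.05],
    [0.10, 0.20, 0.70],
    [0.30, 0.60, 0.10],
])

# The predicted class is the one with the highest score in each row
predicted_classes = class_names[np.argmax(predicted_scores, axis=1)]
print(predicted_classes)
```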

8. Measure Performance

Once we think the network is performing well, we want to measure its performance. To do this we can use some additional data that wasn’t part of the training; this is known as a test set. There are many different methods available for doing this, and which one is best depends on the type of task we are attempting. These metrics are often published as an indication of how well our network performs.
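Two common ways of measuring a classifier's performance are accuracy (the fraction of correct predictions) and a confusion matrix, which counts how often each true class was predicted as each class. The labels below are made up, and the small confusion_matrix function is our own sketch; sklearn.metrics.confusion_matrix uses the same layout (rows are true classes, columns are predicted classes).

```python
import numpy as np

def confusion_matrix(true_labels, predicted_labels, n_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(true_labels, predicted_labels):
        matrix[t, p] += 1
    return matrix

# Hypothetical labels for six held-out samples (classes 0, 1 and 2)
true_labels = np.array([0, 0, 1, 1, 2, 2])
predicted_labels = np.array([0, 1, 1, 1, 2, 0])

accuracy = np.mean(true_labels == predicted_labels)
print("accuracy:", accuracy)
print(confusion_matrix(true_labels, predicted_labels, n_classes=3))
```

The diagonal holds the correct predictions; every off-diagonal count shows which class was mistaken for which.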

9. Tune Hyperparameters

Hyperparameters are all the parameters set by the person configuring the machine learning instead of those learned by the algorithm itself. The hyperparameters include, for example, the number of epochs and the parameters of the optimizer (such as its learning rate). It might be necessary to adjust these and re-run the training many times before we are happy with the result.
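One simple way to tune hyperparameters is a grid search: train once for every combination of candidate values and keep the combination with the lowest loss on a validation set. The sketch assumes a toy linear model trained by gradient descent; the candidate learning rates and epoch counts and the made-up data are illustrative only.

```python
import numpy as np

def train_and_validate(learning_rate, epochs, x_train, y_train, x_val, y_val):
    """Fit y = w * x + b by gradient descent and return the validation loss."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        error = (w * x_train + b) - y_train
        w -= learning_rate * 2 * np.mean(error * x_train)
        b -= learning_rate * 2 * np.mean(error)
    return np.mean(((w * x_val + b) - y_val) ** 2)

# Hypothetical data from y = 2x + 1, split into training and validation sets
x_train, x_val = np.array([0.0, 1.0, 2.0, 3.0]), np.array([1.5, 2.5])
y_train, y_val = 2 * x_train + 1, 2 * x_val + 1

# Try every combination of the two hyperparameters and keep the best one
results = {}
for learning_rate in [0.001, 0.01, 0.05]:
    for epochs in [10, 100]:
        results[(learning_rate, epochs)] = train_and_validate(
            learning_rate, epochs, x_train, y_train, x_val, y_val)
best = min(results, key=results.get)
print("best (learning_rate, epochs):", best)
```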

10. Share Model

Now that we have a trained network that performs at a level we are happy with, we can go and use it on real data to perform a prediction. At this point we might want to consider publishing a file with both the architecture of our network and the weights which it has learned (assuming we didn’t use a pre-trained network). This will allow others to use it as a pre-trained network for their own purposes and to (mostly) reproduce our result.
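As a sketch of what such a file could contain, the code below stores the architecture (here just the list of layer sizes) as JSON and the weight matrices as a NumPy .npz archive. The file names, the save_model/load_model functions and the format are hypothetical, and biases are left out for brevity; a Keras model would normally be saved with its own model.save method instead.

```python
import json
import os
import tempfile

import numpy as np

def save_model(directory, layer_sizes, weights):
    """Hypothetical format: architecture as JSON, weights as a .npz archive."""
    with open(os.path.join(directory, "architecture.json"), "w") as f:
        json.dump({"layer_sizes": layer_sizes}, f)
    # Arrays passed positionally are stored under the names arr_0, arr_1, ...
    np.savez(os.path.join(directory, "weights.npz"), *weights)

def load_model(directory):
    """Read back the architecture and weights written by save_model."""
    with open(os.path.join(directory, "architecture.json")) as f:
        layer_sizes = json.load(f)["layer_sizes"]
    with np.load(os.path.join(directory, "weights.npz")) as data:
        weights = [data[f"arr_{i}"] for i in range(len(data.files))]
    return layer_sizes, weights

# Hypothetical network with 4 inputs, 10 hidden neurons and 3 outputs
layer_sizes = [4, 10, 3]
rng = np.random.default_rng(0)
weights = [rng.normal(size=(n_in, n_out))
           for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

with tempfile.TemporaryDirectory() as tmp:
    save_model(tmp, layer_sizes, weights)
    loaded_sizes, loaded_weights = load_model(tmp)
print(loaded_sizes, [w.shape for w in loaded_weights])
```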

Deep Learning workflow exercise

Think about a problem you’d like to use Deep Learning to solve.

- What do you want a Deep Learning system to be able to tell you?

- What data inputs and outputs will you have?

- Do you think you’ll need to train the network or will a pre-trained network be suitable?

- What data do you have to train with? What preparation will your data need? Consider both the data you are going to predict/classify from and the data you’ll use to train the network.

Discuss your answers with the group or the person next to you.

Deep Learning Libraries

There are many software libraries available for Deep Learning including:

TensorFlow

TensorFlow was developed by Google and is one of the older Deep Learning libraries, ported across many languages since it was first released to the public in 2015. It is very versatile and capable of much more than Deep Learning but as a result it often takes a lot more lines of code to write Deep Learning operations in TensorFlow than in other libraries. It offers (almost) seamless integration with GPU accelerators and Google’s own TPU (Tensor Processing Unit) chips that are built specially for machine learning.

PyTorch

PyTorch was developed by Facebook in 2016 and is a popular choice for Deep Learning applications. It was developed for Python from the start and feels a lot more “pythonic” than TensorFlow. Like TensorFlow, it was designed to do more than just Deep Learning and offers some very low-level interfaces. Like TensorFlow, it’s also very easy to integrate PyTorch with a GPU. In many benchmarks it outperforms the other libraries.

Keras

Keras is designed to be easy to use and usually requires fewer lines of code than other libraries. We have chosen it for this workshop for that reason. Keras can actually work on top of TensorFlow (and several other libraries), hiding away the complexities of TensorFlow while still allowing you to make use of its features.

The performance of Keras is sometimes not as good as other libraries and if you are going to move on to create very large networks using very large datasets then you might want to consider one of the other libraries. But for many applications the performance difference will not be enough to worry about and the time you’ll save with simpler code will exceed what you’ll save by having the code run a little faster.

Keras also benefits from a very good set of online documentation and a large user community. You will find that most of the concepts from Keras translate very well across to the other libraries if you wish to learn them at a later date.

Installing Keras and other dependencies

Follow the instructions in the setup document to install Keras, Seaborn and Sklearn.

Testing Keras Installation

Let’s check you have a suitable version of Keras installed. Open up a new Jupyter notebook or interactive Python console and run the following commands:

from tensorflow import keras
print(keras.__version__)

Solution

You should get a version number reported. At the time of writing 2.4.0 is the latest version.

2.4.0

Testing Seaborn Installation

Let’s check you have a suitable version of seaborn installed. In your Jupyter notebook or interactive Python console run the following commands:

import seaborn
print(seaborn.__version__)

Solution

You should get a version number reported. At the time of writing 0.11.1 is the latest version.

0.11.1

Testing Sklearn Installation

Let’s check you have a suitable version of sklearn installed. In your Jupyter notebook or interactive Python console run the following commands:

import sklearn
print(sklearn.__version__)

Solution

You should get a version number reported. At the time of writing 0.24.1 is the latest version.

0.24.1

Key Points

Machine learning is the process where computers learn to recognise patterns of data.

Artificial neural networks are a machine learning technique based on a model inspired by groups of neurons in the brain.

Artificial neural networks can be trained on example data.

Deep Learning is a machine learning technique based on using many artificial neurons arranged in layers.

Deep Learning is well suited to classification and prediction problems such as image recognition.

To use Deep Learning effectively we need to go through a workflow of: defining the problem, identifying inputs and outputs, preparing data, choosing the type of network, choosing a loss function, training the model, tuning hyperparameters, measuring performance before we can classify data.

Keras is a Deep Learning library that is easier to use than many of the alternatives such as TensorFlow and PyTorch.

Classification by a Neural Network using Keras

Overview

Teaching: 30-60 min

Exercises: 40-45 min

Questions

What is a neural network?

How do I compose a Neural Network using Keras?

How do I train this network on a dataset?

How do I get insight into the learning process?

How do I measure the performance of the network?

Objectives

Use the deep learning workflow to structure the notebook

Explore the dataset using pandas and seaborn

Use one-hot encoding to prepare data for classification in Keras

Describe a fully connected layer

Implement a fully connected layer with Keras

Use Keras to train a small fully connected network on prepared data

Plot the loss curve of the training process

Use a confusion matrix to measure the trained network’s performance on a test set

Introduction

In this episode we will learn how to create and train a Neural Network using Keras to solve a simple classification task.

The goal of this episode is to quickly get your hands dirty in actually defining and training a neural network, without going into depth on how neural networks work on a technical or mathematical level. We want you to go through the most commonly used deep learning workflow that was covered in the introduction. As a reminder, below are the steps of the deep learning workflow:

- Formulate / Outline the problem

- Identify inputs and outputs

- Prepare data

- Choose a pretrained model or start building architecture from scratch

- Choose a loss function and optimizer

- Train the model

- Perform a Prediction/Classification

- Measure performance

- Tune hyperparameters

- Save model

In this episode we will focus on a minimal example for each of these steps; later episodes will build on this knowledge to go into greater depth for some or all of these steps.

GPU usage

For this lesson having a GPU (graphics card) available is not needed. We specifically use very small toy problems so that you do not need one. However, Keras will use your GPU automatically when it is available. Using a GPU becomes necessary when tackling larger datasets or complex problems which require a more complex Neural Network.

1. Formulate / Outline the problem: Penguin classification

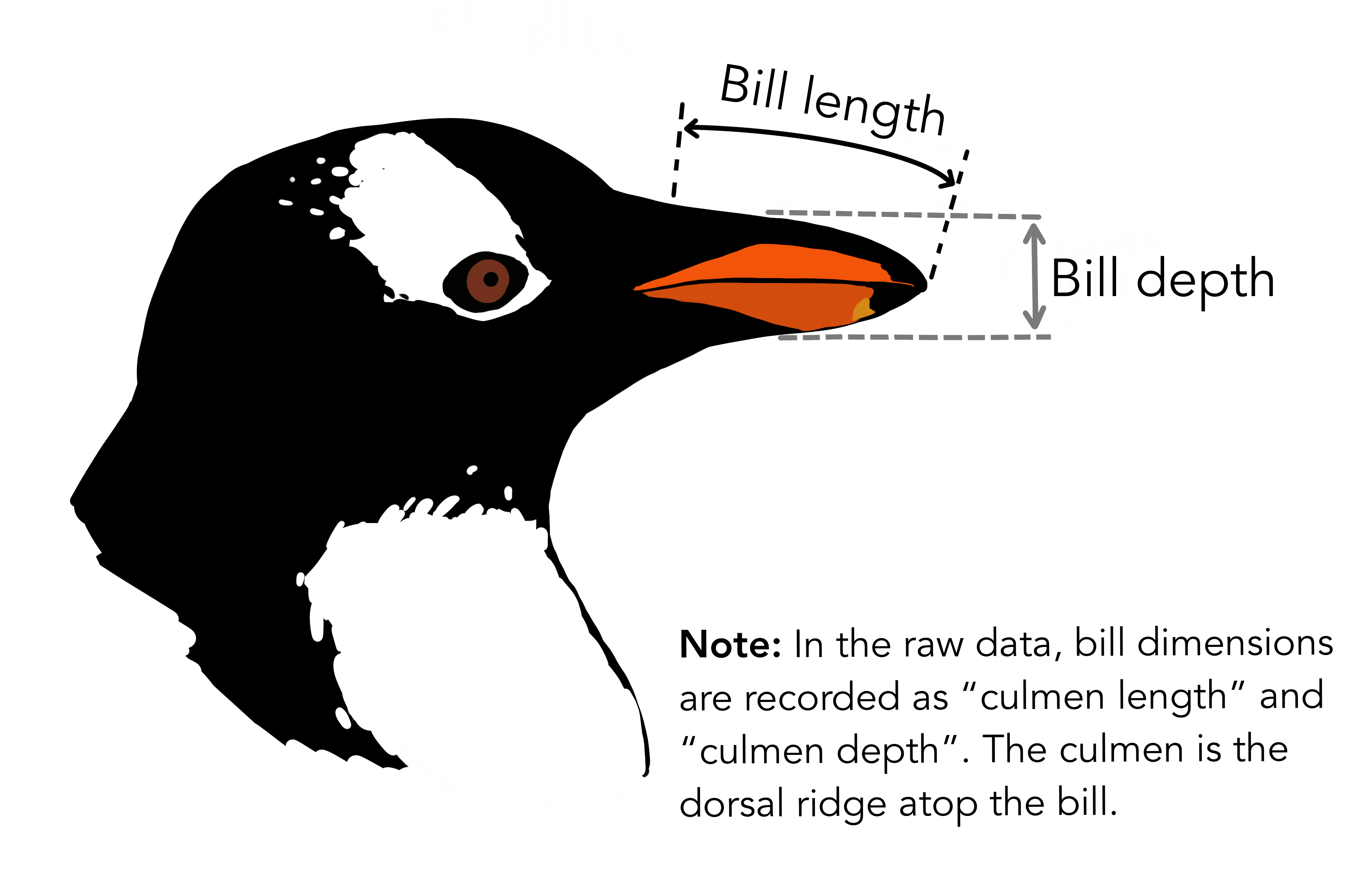

In this episode we will be using the penguin dataset, which was published in 2020 by Allison Horst and contains data on three different species of penguins.

We will use the penguin dataset to train a neural network which can classify which species a penguin belongs to, based on their physical characteristics.

Goal

The goal is to predict a penguin’s species using the attributes available in this dataset.

The palmerpenguins data contains size measurements for three penguin species observed on three islands in the Palmer Archipelago, Antarctica.

The physical attributes measured are flipper length, beak length, beak width, body mass, and sex.

Artwork by @allison_horst


These data were collected from 2007 - 2009 by Dr. Kristen Gorman with the Palmer Station Long Term Ecological Research Program, part of the US Long Term Ecological Research Network. The data were imported directly from the Environmental Data Initiative (EDI) Data Portal, and are available for use by CC0 license (“No Rights Reserved”) in accordance with the Palmer Station Data Policy.

2. Identify inputs and outputs

To identify the inputs and outputs that we will use to design the neural network we need to familiarize ourselves with the dataset. This step is sometimes also called data exploration.

We will start by importing the Seaborn library, which will help us get the dataset and visualize it. Seaborn is a powerful library with many visualizations. Keep in mind that it requires the data to be in a pandas dataframe; luckily, the datasets available in seaborn are already in a pandas dataframe.

import seaborn as sns

We can load the penguin dataset using:

penguins = sns.load_dataset('penguins')

This will give you a pandas dataframe which contains the penguin data.

Penguin Dataset

Inspect the penguins dataset.

- What are the different features called in the dataframe?

- Are the target classes of the dataset stored as numbers or strings?

- How many samples does this dataset have?

Solution

1. Using the pandas head function you can see the names of the features. Using the describe function we can also see some statistics for the numeric columns.

penguins.head()

| | species | island | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g | sex |
|---|---|---|---|---|---|---|---|
| 0 | Adelie | Torgersen | 39.1 | 18.7 | 181.0 | 3750.0 | Male |
| 1 | Adelie | Torgersen | 39.5 | 17.4 | 186.0 | 3800.0 | Female |
| 2 | Adelie | Torgersen | 40.3 | 18.0 | 195.0 | 3250.0 | Female |
| 3 | Adelie | Torgersen | NaN | NaN | NaN | NaN | NaN |
| 4 | Adelie | Torgersen | 36.7 | 19.3 | 193.0 | 3450.0 | Female |

penguins.describe()

| | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g |
|---|---|---|---|---|
| count | 342.000000 | 342.000000 | 342.000000 | 342.000000 |
| mean | 43.921930 | 17.151170 | 200.915205 | 4201.754386 |
| std | 5.459584 | 1.974793 | 14.061714 | 801.954536 |
| min | 32.100000 | 13.100000 | 172.000000 | 2700.000000 |
| 25% | 39.225000 | 15.600000 | 190.000000 | 3550.000000 |
| 50% | 44.450000 | 17.300000 | 197.000000 | 4050.000000 |
| 75% | 48.500000 | 18.700000 | 213.000000 | 4750.000000 |
| max | 59.600000 | 21.500000 | 231.000000 | 6300.000000 |

2. We can get the unique values in the species column using the unique function of pandas. It shows the target class is stored as a string and has 3 unique values. This type of column is usually called a ‘categorical’ column.

penguins["species"].unique()

['Adelie', 'Chinstrap', 'Gentoo']

3. Using the describe function on the species column shows there are 344 samples and 3 unique species.

penguins["species"].describe()

count 344
unique 3
top Adelie
freq 152
Name: species, dtype: object

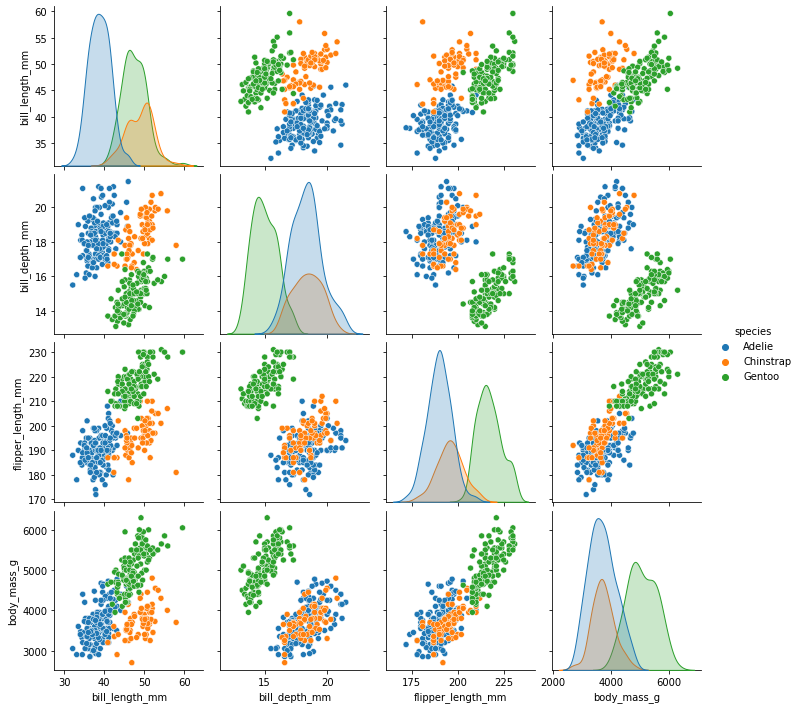

Visualization

Looking at numbers like this usually does not give a very good intuition about the data we are working with, so let us create a visualization.

Pair Plot

One nice visualization for datasets with relatively few attributes is the Pair Plot.

This can be created using sns.pairplot(...). It shows a scatterplot of each attribute plotted against each of the other attributes.

By using the hue='species' setting for the pairplot, the graphs on the diagonal are layered kernel density estimate plots for the different values of the species column.

sns.pairplot(penguins, hue="species")

Pairplot

Take a look at the pairplot we created. Consider the following questions:

- Is there any class that is easily distinguishable from the others?

- Which combination of attributes shows the best separation for all 3 class labels at once?

Solution

The plots show that the green class, Gentoo, is somewhat more easily distinguishable from the other two. The other two seem to be most easily separated by a combination of bill length and bill depth.

Input and Output Selection

Now that we have familiarized ourselves with the dataset we can select the data attributes to use as input for the neural network and the target that we want to predict.

In the rest of this episode we will use the bill_length_mm, bill_depth_mm, flipper_length_mm, body_mass_g attributes.

The target for the classification task will be the species.

Data Exploration

Exploring the data is an important step to familiarize yourself with the problem and to help you determine the relevant inputs and outputs.

3. Prepare data

The input data and target data are not yet in a format that is suitable to use for training a neural network.

Change types if needed

First, the species column is our categorical target; however, pandas still sees it as the generic type object. We can convert this to the pandas categorical type:

penguins['species'] = penguins['species'].astype('category')

This will make later interaction with this column a little easier.
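To see what the categorical type gives us, here is a small made-up column of species names. After conversion, pandas stores the sorted unique labels in .cat.categories and one integer code per row in .cat.codes.

```python
import pandas as pd

# A small hypothetical column of species names, like penguins['species']
species = pd.Series(["Gentoo", "Adelie", "Chinstrap", "Gentoo"])
species = species.astype("category")

print(species.dtype)                      # category
print(species.cat.categories.tolist())    # the unique labels, sorted
print(species.cat.codes.tolist())         # one integer code per row
```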

Clean missing values

During the exploration phase you may have noticed that some rows in the dataset have missing (NaN) values. Leaving such values in the input data will ruin the training, so we need to deal with them.

There are many ways to deal with missing values, but for now we will just remove the offending rows by adding a call to dropna():

# Drop two columns and the rows that have NaN values in them

penguins_filtered = penguins.drop(columns=['island', 'sex']).dropna()

# Extract columns corresponding to features

penguins_features = penguins_filtered.drop(columns=['species'])
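The effect of dropna() is easiest to see on a tiny table. The DataFrame below is a hypothetical miniature of the penguin data (the third row's values are invented); its second row contains NaN values and is removed, using the same drop/dropna pattern as above.

```python
import numpy as np
import pandas as pd

# A hypothetical miniature version of the penguin table
df = pd.DataFrame({
    "species": ["Adelie", "Adelie", "Gentoo"],
    "island": ["Torgersen", "Torgersen", "Biscoe"],
    "bill_length_mm": [39.1, np.nan, 46.1],
    "body_mass_g": [3750.0, np.nan, 4500.0],
})

# Drop a column we won't use, then every row that still contains a NaN
cleaned = df.drop(columns=["island"]).dropna()
# Keep only the feature columns
features = cleaned.drop(columns=["species"])
print(len(df), len(cleaned), list(features.columns))
```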

Prepare target data for training

Second, the target data is also in a format that cannot be used in training. A neural network can only take numerical inputs and outputs, and learns by calculating how “far away” the species predicted by the neural network is from the true species. When the target is a string category column, as we have here, it is very difficult to determine this “distance” or error. Therefore we will transform this column into a more suitable format. Again there are many ways to do this; we will be using one-hot encoding. This encoding creates multiple columns, as many as there are unique values, and puts a 1 in the column with the corresponding correct class, and 0’s in the other columns. For instance, for a penguin of the Adelie species the one-hot encoding would be 1 0 0.

Fortunately pandas is able to generate this encoding for us.

import pandas as pd

target = pd.get_dummies(penguins_filtered['species'])

target.head() # print out the top 5 to see what it looks like.
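What pd.get_dummies does can be sketched by hand with NumPy. The one_hot function below is our own illustration, not the pandas implementation, and the class order follows the alphabetical order of the species. Note that from pandas 2.0 onwards get_dummies returns boolean (True/False) columns by default; its dtype argument (for example dtype=int) can be used if you prefer 0s and 1s.

```python
import numpy as np

def one_hot(labels, classes):
    """Return an (n_samples, n_classes) array with a single 1 per row."""
    index = {name: i for i, name in enumerate(classes)}
    encoded = np.zeros((len(labels), len(classes)), dtype=int)
    for row, label in enumerate(labels):
        encoded[row, index[label]] = 1  # mark the column of the true class
    return encoded

classes = ["Adelie", "Chinstrap", "Gentoo"]
print(one_hot(["Adelie", "Gentoo", "Chinstrap"], classes))
```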

Split data into training and test set

Finally, we will split the dataset into a training set and a test set. As the names imply, we will use the training set to train the neural network, while the test set is kept separate. We will use the test set to assess the performance of the trained neural network on unseen samples. In many cases a validation set is also kept separate from the training and test sets (i.e. the dataset is split into 3 parts). This validation set is then used to select the values of the parameters of the neural network and the training methods. For this episode, however, we will keep it to just a training and a test set.

To split the cleaned dataset into a training and test set we will use a very convenient function from sklearn called train_test_split.

This function takes a number of parameters:

- The first two are the dataset and the corresponding targets.

- Next is the named parameter test_size. This is the fraction of the dataset that is used for testing; in this case 0.2 means 20% of the data will be used for testing.

- random_state controls the shuffling of the dataset. Setting this value will reproduce the same results (assuming you give the same integer) every time it is called.

- shuffle, which can be either True or False, controls whether the order of the rows of the dataset is shuffled before splitting. It defaults to True.

- stratify is a more advanced parameter that controls how the split is done. By setting it to target, the train and test sets the function returns will have roughly the same proportions (with regard to the number of penguins of each species) as the dataset.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(penguins_features, target, test_size=0.2, random_state=0, shuffle=True, stratify=target)
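The idea behind stratify can be sketched by splitting each class separately. The stratified_split function below is our own simplified illustration, not the algorithm sklearn uses (which handles rounding differently), and the labels are made up: with 60, 30 and 10 samples per class and a test fraction of 0.2, each class contributes exactly 20% of its samples to the test set.

```python
import numpy as np

def stratified_split(labels, test_fraction, seed=0):
    """Return train and test indices with each class split in the same ratio."""
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        # Shuffle the indices belonging to this class, then cut them
        cls_idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = int(round(len(cls_idx) * test_fraction))
        test_idx.extend(cls_idx[:n_test])
        train_idx.extend(cls_idx[n_test:])
    return np.array(train_idx, dtype=int), np.array(test_idx, dtype=int)

# Hypothetical labels: 60 of class "A", 30 of "B" and 10 of "C"
labels = np.array(["A"] * 60 + ["B"] * 30 + ["C"] * 10)
train_idx, test_idx = stratified_split(labels, test_fraction=0.2)
for cls in ["A", "B", "C"]:
    print(cls, np.sum(labels[train_idx] == cls), np.sum(labels[test_idx] == cls))
```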

Training and Test sets

Take a look at the training and test set we created.

- How many samples do the training and test sets have?

- Are the classes in the training set well balanced?

Solution

Using y_train.shape and y_test.shape we can see the training set has 273 samples and the test set has 69 samples.

We can check the balance of classes by counting the number of ones for each of the columns in the one-hot-encoded target, which shows the training set has 121 Adelie, 98 Gentoo and 54 Chinstrap samples.

y_train.sum()

Adelie 121
Chinstrap 54
Gentoo 98
dtype: int64

The dataset is not perfectly balanced, but it is not orders of magnitude out of balance either. So we will leave it as it is.

Keras for Neural Networks

For this lesson we will be using Keras to define and train our neural network models.

Keras is a machine learning framework with ease of use as one of its main features.

It is part of the tensorflow python package and can be imported using from tensorflow import keras.

Keras includes functions, classes and definitions to define deep learning models, cost functions and optimizers (optimizers are used to train a model).

Before we move on to the next section of the workflow we need to make sure we have Keras imported. We do this as follows:

from tensorflow import keras

For this class it is useful if everyone gets the same results from their training. Keras uses a random number generator at certain points during its execution. Therefore we will need to set two random seeds, one for numpy and one for tensorflow:

from numpy.random import seed

seed(1)

from tensorflow.random import set_seed

set_seed(2)

4. Choose a pretrained model or start building architecture from scratch

Now we will build a neural network from scratch, and although this sounds like a daunting task, with Keras it is actually surprisingly straightforward.

With Keras you compose a neural network by creating layers and linking them together. For now we will only use one type of layer called a fully connected or Dense layer. In Keras this is defined by the keras.layers.Dense class.

A dense layer has a number of neurons, which is a parameter you can choose when you create the layer. When connecting the layer to its input and output layers every neuron in the dense layer gets an edge (i.e. connection) to all of the input neurons and all of the output neurons. The hidden layer in the image in the introduction of this episode is a Dense layer.
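The core computation of a Dense layer can be sketched in NumPy. According to the Keras documentation, Dense computes activation(dot(input, kernel) + bias). The hypothetical helper dense_forward below leaves out the activation and uses random weights, purely to show that each of the 10 neurons receives an edge from all 4 inputs.

```python
import numpy as np

def dense_forward(x, weights, bias):
    """Sketch of a fully connected layer without activation.

    x:       (batch_size, n_inputs)
    weights: (n_inputs, n_neurons), one column of incoming edge weights per neuron
    bias:    (n_neurons,)
    """
    # Every neuron computes a weighted sum over *all* inputs, plus its own bias
    return x @ weights + bias

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 4))         # 5 samples with 4 features each
weights = rng.normal(size=(4, 10))  # 4 inputs fully connected to 10 neurons
bias = np.zeros(10)

out = dense_forward(x, weights, bias)
print(out.shape)  # (5, 10)
```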

The input in Keras also gets special treatment. Keras automatically calculates the number of inputs and outputs a layer needs, and therefore how many edges need to be created. This means we need to let Keras know how big our input is going to be. We do this by instantiating a keras.Input class and telling it how big our input is.

inputs = keras.Input(shape=(X_train.shape[1],))

We store a reference to this input class in a variable so we can pass it to the creation of our hidden layer. Creating the hidden layer can then be done as follows:

hidden_layer = keras.layers.Dense(10, activation="relu")(inputs)

The instantiation here has 2 parameters and a seemingly strange combination of parentheses, so let’s take a closer look.

The first parameter, 10, is the number of neurons we want in this layer. This is one of the hyperparameters of our system and needs to be chosen carefully. We will get back to this in the section on hyperparameter tuning.

The second parameter is the activation function to use. Here we choose relu, which returns 0 for inputs that are 0 or below and is the identity function (returning the same value) for inputs above 0.

This is a commonly used activation function in deep neural networks that has been shown to work well in practice.

Next we see an extra set of parentheses with inputs in them. This means that after creating an instance of the Dense layer we call it as if it was a function. This tells the Dense layer to connect to the layer passed as a parameter, in this case the inputs.

Finally we store a reference so we can pass it to the output layer in a minute.
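The relu rule described above fits in one line of NumPy. This is a sketch for intuition, not Keras's own implementation:

```python
import numpy as np

def relu(x):
    # 0 for inputs that are 0 or below, identity for inputs above 0
    return np.maximum(0, x)

print(relu(np.array([-2.0, 0.0, 3.5])))  # [0.  0.  3.5]
```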

Now we create another layer that will be our output layer. Again we use a Dense layer and so the call is very similar to the previous one.

output_layer = keras.layers.Dense(3, activation="softmax")(hidden_layer)

Because we chose the one-hot encoding, we use 3 neurons for the output layer.

The softmax activation ensures that the three output neurons produce values in the range (0, 1) and they sum to 1. We can interpret this as a kind of ‘probability’ that the sample belongs to a certain species.
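A minimal NumPy sketch of softmax, assuming a single vector of three raw output scores (the numbers are made up). Subtracting the maximum first is a common numerical-stability trick that leaves the result unchanged:

```python
import numpy as np

def softmax(logits):
    # Shift by the max to avoid overflow in exp; the ratios are unaffected
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=-1, keepdims=True)

probs = softmax(np.array([2.0, 1.0, 0.1]))
print(probs, probs.sum())  # three values in (0, 1) that sum to 1
```

The largest raw score still gets the largest probability, which is why taking the highest softmax output later gives the predicted species.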

Now that we have defined the layers of our neural network we can combine them into a Keras model which facilitates training the network.

model = keras.Model(inputs=inputs, outputs=output_layer)

model.summary()

The model summary here can show you some information about the neural network we have defined.

Create the neural network

With the code snippets above, we defined a Keras model with 1 hidden layer with 10 neurons and an output layer with 3 neurons.

- How many parameters does the resulting model have?

- What happens to the number of parameters if we increase or decrease the number of neurons in the hidden layer?

Solution

inputs = keras.Input(shape=(X_train.shape[1],))
hidden_layer = keras.layers.Dense(10, activation="relu")(inputs)
output_layer = keras.layers.Dense(3, activation="softmax")(hidden_layer)
model = keras.Model(inputs=inputs, outputs=output_layer)
model.summary()

Model: "functional_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         [(None, 4)]               0
_________________________________________________________________
dense (Dense)                (None, 10)                50
_________________________________________________________________
dense_1 (Dense)              (None, 3)                 33
=================================================================
Total params: 83
Trainable params: 83
Non-trainable params: 0
_________________________________________________________________

The model has 83 trainable parameters. If you increase the number of neurons in the hidden layer, the number of trainable parameters in both the hidden and output layers increases; if you decrease it, both decrease accordingly.
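The parameter count can be checked by hand: a Dense layer has one weight per incoming edge plus one bias per neuron. The helpers below (dense_params and count_params are hypothetical names, not Keras functions) reproduce the 83 from the summary and show how the total changes with the hidden layer size:

```python
def dense_params(n_inputs, n_neurons):
    # One weight per incoming edge plus one bias per neuron
    return n_inputs * n_neurons + n_neurons

def count_params(n_features, hidden_sizes, n_outputs):
    # Chain of Dense layers: features -> hidden layers -> outputs
    sizes = [n_features, *hidden_sizes, n_outputs]
    return sum(dense_params(n_in, n_out) for n_in, n_out in zip(sizes[:-1], sizes[1:]))

print(count_params(4, [10], 3))  # 83
print(count_params(4, [20], 3))  # 163
print(count_params(4, [5], 3))   # 43
```

With 4 input features and 3 outputs, every extra hidden neuron adds 4 weights and 1 bias in the hidden layer plus 3 weights in the output layer, so 8 parameters in total.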

How to choose an architecture?

Even for this small neural network, we had to make a choice on the number of hidden neurons. Other choices to be made are the number of layers and type of layers (as we will see later). You might wonder how you should make these architectural choices. Unfortunately, there are no clear rules to follow here, and it often boils down to a lot of trial and error. However, it is recommended to look at what others have done with similar datasets and problems. Another best practice is to start with a relatively simple architecture. Once it is running, start to add layers and tweak the network to see if performance increases.

If your data and problem are very similar to what others have done, you can often use a pretrained network. Even if your problem is different, but the data type is common (for example images), you can use a pretrained network and fine-tune it for your problem. A large number of openly available pretrained networks can be found in the Model Zoo, PyTorch Hub or TensorFlow Hub.

5. Choose a loss function and optimizer

We have now designed a neural network that in theory we should be able to train to classify Penguins. However, we first need to select an appropriate loss function that we will use during training. This loss function tells the training algorithm how wrong, or how ‘far away’ from the true value the predicted value is.

For the one-hot encoding that we selected before, a fitting loss function is the Categorical Crossentropy loss. In Keras this is implemented in the keras.losses.CategoricalCrossentropy class. This loss function works well in combination with the softmax activation function we chose earlier.

The Categorical Crossentropy works by comparing the probabilities that the neural network predicts with the ‘true’ probabilities that we generated using the one-hot encoding. This measures how closely the distribution of the three neural network outputs matches the distribution of the three values in the one-hot encoding. It is lower when the distributions are more similar.

For more information on the available loss functions in Keras you can check the documentation.
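To make the comparison concrete, here is a simplified NumPy version of categorical crossentropy: -sum(y_true * log(y_pred)) per sample, averaged over samples, with clipping to avoid log(0). It is a sketch for intuition, not Keras's exact implementation, and the predicted probabilities are invented:

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip probabilities so log never sees exactly 0 or 1
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    # Only the log-probability of the true class survives the multiplication
    return np.mean(-np.sum(y_true * np.log(y_pred), axis=-1))

y_onehot = np.array([[0.0, 0.0, 1.0]])       # the penguin belongs to the third class
good_pred = np.array([[0.05, 0.05, 0.90]])
poor_pred = np.array([[0.30, 0.20, 0.50]])

print(categorical_crossentropy(y_onehot, good_pred))  # about 0.105
print(categorical_crossentropy(y_onehot, poor_pred))  # about 0.693
```

The more probability the network puts on the true class, the lower the loss.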

Next we need to choose which optimizer to use and, if this optimizer has parameters, what values to use for those. Furthermore, we need to specify how many times to show the training samples to the optimizer.

Once more, Keras gives us plenty of choices all of which have their own pros and cons, but for now let us go with the widely used Adam optimizer. Adam has a number of parameters, but the default values work well for most problems. So we will use it with its default parameters.

Combining this with the loss function we decided on earlier we can now compile the model using model.compile. Compiling the model prepares it to start the training.

model.compile(optimizer='adam', loss=keras.losses.CategoricalCrossentropy())

6. Train model

We are now ready to train the model.

Training the model is done using the fit method. It takes the input data and target data as inputs, and it has several other parameters for certain options of the training.

Here we only set a different number of epochs. One training epoch means that every sample in the training data has been shown to the neural network and used to update its parameters.

history = model.fit(X_train, y_train, epochs=100)

The fit method returns a history object that has a history attribute with the training loss and potentially other metrics per training epoch. It can be very insightful to plot the training loss to see how the training progresses. Using seaborn we can do this as follows:

sns.lineplot(x=history.epoch, y=history.history['loss'])

This plot can be used to identify whether the training is well configured or whether there are problems that need to be addressed.

The Training Curve

Look at the training curve we have just made.

- How does the training progress?

- Does the training loss increase or decrease?

- Does it change fast or slowly?

- Does the graph look very jittery?

- Do you think the resulting trained network will work well on the test set?

Solution

- The loss curve should drop quite quickly in a smooth line with little jitter

- The results of the training give very little information on its performance on a test set. You should be careful not to use it as an indication of a well trained network.

7. Perform a Prediction/Classification

Now that we have a trained neural network, we can use it to predict new samples of penguins using the predict method.

We will use the neural network to predict the species of the test set. We will be using this prediction in the next step to measure the performance of our trained network.

This will return a numpy array, which we convert to a pandas dataframe to easily see the labels.

y_pred = model.predict(X_test)

prediction = pd.DataFrame(y_pred, columns=target.columns)

prediction

Output

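|     | Adelie   | Chinstrap | Gentoo   |
|-----|----------|-----------|----------|
| 0   | 0.304484 | 0.192893  | 0.502623 |
| 1   | 0.527107 | 0.095888  | 0.377005 |
| 2   | 0.373989 | 0.195604  | 0.430406 |
| 3   | 0.493643 | 0.154104  | 0.352253 |
| 4   | 0.309051 | 0.308646  | 0.382303 |
| …   | …        | …         | …        |
| 64  | 0.406074 | 0.191430  | 0.402496 |
| 65  | 0.645621 | 0.077174  | 0.277204 |
| 66  | 0.356284 | 0.185958  | 0.457758 |
| 67  | 0.393868 | 0.159575  | 0.446557 |
| 68  | 0.509837 | 0.144219  | 0.345943 |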

Remember that the output of the network uses the softmax activation function and has three outputs, one for each species. This dataframe shows this nicely.

We now need to transform this output to one penguin species per sample. We can do this by looking for the index of the highest valued output and converting that to the corresponding species. Pandas dataframes have the idxmax method, which will do exactly that.

predicted_species = prediction.idxmax(axis="columns")

predicted_species

Output

0     Gentoo
1     Adelie
2     Gentoo
3     Adelie
4     Gentoo
       ...
64    Adelie
65    Adelie
66    Gentoo
67    Gentoo
68    Adelie
Length: 69, dtype: object
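To see idxmax in isolation, here is a sketch on the first two rows of the softmax output above, assuming the columns are ordered Adelie, Chinstrap, Gentoo as in target.columns. The name toy_prediction is hypothetical so it does not overwrite the lesson's prediction variable.

```python
import pandas as pd

# First two rows of the softmax output shown above
toy_prediction = pd.DataFrame(
    [[0.304484, 0.192893, 0.502623],
     [0.527107, 0.095888, 0.377005]],
    columns=["Adelie", "Chinstrap", "Gentoo"],
)

# For each row, idxmax(axis="columns") returns the column label holding the largest value
toy_predicted = toy_prediction.idxmax(axis="columns")
print(toy_predicted.tolist())  # ['Gentoo', 'Adelie']
```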

8. Measuring Performance

Now that we have a trained neural network it is important to assess how well it performs. We want to know how well it will perform in a realistic prediction scenario. Measuring performance will also come back when we tune the hyperparameters.

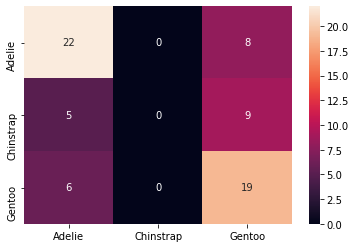

We have created a test set during the data preparation stage which we will use now to create a confusion matrix.

Confusion matrix

With the predicted species we can now create a confusion matrix and display it using seaborn.

To create a confusion matrix we will use another convenient function from sklearn called confusion_matrix. This function takes as a first parameter the true labels of the test set. We can get these by using the idxmax method on the y_test dataframe. The second parameter is the predicted labels, which we computed above.

from sklearn.metrics import confusion_matrix

true_species = y_test.idxmax(axis="columns")

matrix = confusion_matrix(true_species, predicted_species)

print(matrix)

[[22  0  8]
 [ 5  0  9]
 [ 6  0 19]]

Unfortunately, this matrix is rather hard to read. It’s not clear which column and which row corresponds to which species. So let’s convert it to a pandas dataframe with its index and columns set to the species as follows:

# Convert to a pandas dataframe

confusion_df = pd.DataFrame(matrix, index=y_test.columns.values, columns=y_test.columns.values)

# Set the names of the x and y axis, this helps with the readability of the heatmap.

confusion_df.index.name = 'True Label'

confusion_df.columns.name = 'Predicted Label'

We can then use the heatmap function from seaborn to create a nice visualization of the confusion matrix. The annot=True parameter here will put the numbers from the confusion matrix in the heatmap.

sns.heatmap(confusion_df, annot=True)
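The row and column order of confusion_matrix follows the sorted labels it sees. A small sketch with hypothetical true and predicted labels for six penguins shows how to fix that order explicitly with the labels parameter, which is useful when a class (like Chinstrap here) is never predicted:

```python
import pandas as pd
from sklearn.metrics import confusion_matrix

species = ["Adelie", "Chinstrap", "Gentoo"]
# Hypothetical labels: Chinstrap is never predicted
true_labels = ["Adelie", "Adelie", "Chinstrap", "Gentoo", "Gentoo", "Chinstrap"]
pred_labels = ["Adelie", "Gentoo", "Gentoo", "Gentoo", "Gentoo", "Adelie"]

# Rows are true labels, columns are predicted labels, both in the order of `species`
toy_matrix = confusion_matrix(true_labels, pred_labels, labels=species)

toy_confusion_df = pd.DataFrame(toy_matrix, index=species, columns=species)
toy_confusion_df.index.name = "True Label"
toy_confusion_df.columns.name = "Predicted Label"
print(toy_confusion_df)
```

Correct predictions lie on the diagonal; an all-zero column means that class was never predicted.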

Confusion Matrix

Measure the performance of the neural network you trained and visualize a confusion matrix.

- Did the neural network perform well on the test set?

- Did you expect this from the training loss you saw?

- What could we do to improve the performance?

Solution

The confusion matrix shows that the predictions for Adelie and Gentoo are decent, but could be improved. However, Chinstrap is never predicted.

The training loss was very low, so from that perspective this may be surprising. But this illustrates very well why a test set is important when training neural networks.

We can try many things to improve the performance from here. One of the first things we can try is to balance the dataset better. Other options include changing the network architecture or changing the training parameters.

9. Tune hyperparameters

As we discussed before, the design and training of a neural network comes with many hyperparameter choices. We will go into more depth on these hyperparameters in later episodes. For now it is important to realize that the parameters we chose were somewhat arbitrary and that more careful consideration is needed to pick hyperparameter values.

10. Share Model

It is very useful to be able to use the trained neural network at a later stage without having to retrain it.

This can be done by using the save method of the model. It takes a string as a parameter, which is the path of a directory where the model is stored. (In Keras 3 the path must instead end in a file extension such as .keras.)

model.save('my_first_model')

This saved model can be loaded again by using the load_model function as follows:

pretrained_model = keras.models.load_model('my_first_model')

This loaded model can be used as before to predict.

# use the pretrained model here

y_pretrained_pred = pretrained_model.predict(X_test)

pretrained_prediction = pd.DataFrame(y_pretrained_pred, columns=target.columns.values)

# idxmax will select the column for each row with the highest value

pretrained_predicted_species = pretrained_prediction.idxmax(axis="columns")

print(pretrained_predicted_species)

Output

0     Adelie
1     Gentoo
2     Adelie
3     Gentoo
4     Gentoo
       ...
64    Gentoo
65    Gentoo
66    Adelie
67    Adelie
68    Gentoo
Length: 69, dtype: object

Key Points

The deep learning workflow is a useful tool to structure your approach; it helps to make sure you do not forget any important steps.

Exploring the data is an important step to familiarize yourself with the problem and to help you determine the relevant inputs and outputs.

One-hot encoding is a preprocessing step to prepare labels for classification in Keras.

A fully connected layer is a layer which has connections to all neurons in the previous and subsequent layers.

keras.layers.Dense is an implementation of a fully connected layer, you can set the number of neurons in the layer and the activation function used.

To train a neural network with Keras we need to first define the network using layers and the Model class. Then we can train it using the model.fit method.

Plotting the loss curve can be used to identify and troubleshoot the training process.

The loss curve on the training set does not provide any information on how well a network performs in a real setting.

Creating a confusion matrix with results from a test set gives better insight into the network’s performance.

Monitor the training process

Overview

Teaching: 135 min

Exercises: 80 min

Questions

How do I set the training goal?

How do I monitor the training process?

How do I detect (and avoid) overfitting?

What are common options to improve the model performance?

Objectives

Explain the importance of splitting the training data

Use the data splits to plot the training process

Set the training goal for your deep neural network

Measure the performance of your deep neural network

Interpret the training plots to recognize overfitting

Implement basic strategies to prevent overfitting

Import & explore the data

Import dataset

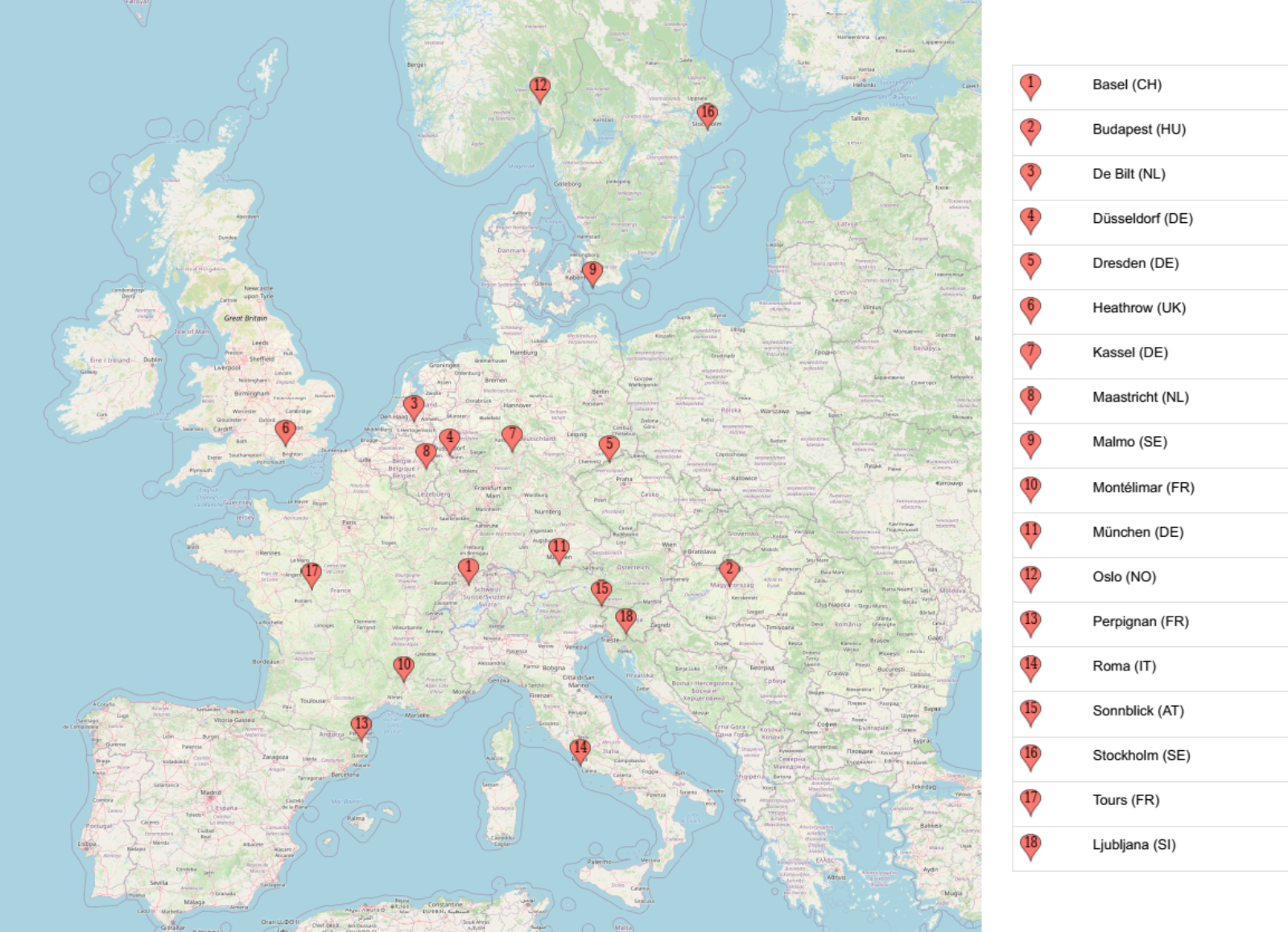

Here we want to work with the weather prediction dataset (the light version), which can be downloaded from Zenodo.

It contains daily weather observations from 11 different European cities or places through the years 2000 to 2010. For all locations the data contains the variables ‘mean temperature’, ‘max temperature’, and ‘min temperature’. In addition, several of the following variables are provided: ‘cloud_cover’, ‘wind_speed’, ‘wind_gust’, ‘humidity’, ‘pressure’, ‘global_radiation’, ‘precipitation’, ‘sunshine’, but not all of them are provided for all locations. A more extensive description of the dataset, including the different physical units, is given in the accompanying metadata file.

import pandas as pd

filename_data = "weather_prediction_dataset_light.csv"

data = pd.read_csv(filename_data)

data.head()

|   | DATE     | MONTH | BASEL_cloud_cover | BASEL_humidity | BASEL_pressure | … |
|---|----------|-------|-------------------|----------------|----------------|---|
| 0 | 20000101 | 1     | 8                 | 0.89           | 1.0286         | … |
| 1 | 20000102 | 1     | 8                 | 0.87           | 1.0318         | … |
| 2 | 20000103 | 1     | 5                 | 0.81           | 1.0314         | … |
| 3 | 20000104 | 1     | 7                 | 0.79           | 1.0262         | … |
| 4 | 20000105 | 1     | 5                 | 0.90           | 1.0246         | … |

Load the data

If you have not downloaded the data yet, you can also load it directly from Zenodo:

data = pd.read_csv("https://zenodo.org/record/5071376/files/weather_prediction_dataset_light.csv?download=1")

Brief exploration of the data

Let us start with a quick look at the type of features that we find in the data.

data.columns

Index(['DATE', 'MONTH', 'BASEL_cloud_cover', 'BASEL_humidity',
       'BASEL_pressure', 'BASEL_global_radiation', 'BASEL_precipitation',
       'BASEL_sunshine', 'BASEL_temp_mean', 'BASEL_temp_min', 'BASEL_temp_max',
       ...
       'SONNBLICK_temp_min', 'SONNBLICK_temp_max', 'TOURS_humidity',
       'TOURS_pressure', 'TOURS_global_radiation', 'TOURS_precipitation',
       'TOURS_temp_mean', 'TOURS_temp_min', 'TOURS_temp_max'],
      dtype='object')

Exercise: Explore the dataset

Let’s get a quick idea of the dataset.

- How many data points do we have?

- How many features does the data have (don’t count month and date as a feature)?

- What are the different measured variable types in the data and how many are there (humidity etc.)?

Solution

data.shape

This will give both the number of data points (3654) and the number of features (89 + month + date).

To see what type of features the data contains we could run something like:

print({x.split("_")[-1] for x in data.columns if x not in ["MONTH", "DATE"]})

{'precipitation', 'max', 'radiation', 'humidity', 'sunshine', 'min', 'pressure', 'mean', 'cover'}

An alternative way, which is slightly more complicated but gives better results, is using regex.

import re
feature_names = set()
for col in data.columns:
    feature_names.update(re.findall('[^A-Z]{2,}', col))
feature_names

In total there are 9 different measured variables.
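The difference between the two approaches is easiest to see on a few column names. This sketch uses a hypothetical subset of names in the style of the weather dataset: splitting on "_" keeps only the last word, while the regex keeps every run of two or more non-uppercase characters, i.e. the full variable suffix after the uppercase location name.

```python
import re

# Hypothetical subset of column names in the style of the weather dataset
columns = ["DATE", "MONTH", "BASEL_cloud_cover", "BASEL_temp_mean",
           "BASEL_global_radiation", "TOURS_temp_mean"]

# Splitting on "_" keeps only the last word, e.g. "cover" or "mean"
split_names = {c.split("_")[-1] for c in columns if c not in ["MONTH", "DATE"]}

# The regex keeps the whole lowercase suffix, e.g. "_cloud_cover" or "_temp_mean"
regex_names = set()
for col in columns:
    regex_names.update(re.findall("[^A-Z]{2,}", col))

print(sorted(split_names))  # ['cover', 'mean', 'radiation']
print(sorted(regex_names))  # ['_cloud_cover', '_global_radiation', '_temp_mean']
```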

Define the problem: Predict tomorrow’s sunshine hours

Select a subset and split into data (X) and labels (y)

The full dataset comprises 10 years (3654 days), of which we will only select the first 3 years here.

We will then define what exactly we want to predict from this data. A very common task with weather data is to make a prediction about the weather sometime in the future, say the next day. The present dataset is sorted by “DATE”, so for each row i in the table we can pick a corresponding feature and location from row i+1 that we later want to predict with our model.

Here we will pick a rather difficult-to-predict feature, sunshine hours, which we want to predict for the location: BASEL.

nr_rows = 365*3

# data

X_data = data.loc[:nr_rows].drop(columns=['DATE', 'MONTH'])

# labels (sunshine hours the next day)

y_data = data.loc[1:(nr_rows + 1)]["BASEL_sunshine"]
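The one-day shift between data and labels can be checked on a tiny hypothetical table. Note that .loc slicing is label-based and includes the end label, so both selections contain the same number of rows. Their index labels differ by one, but the pairing used for training is by position.

```python
import pandas as pd

# Hypothetical 6-day toy table following the layout of the weather data
toy = pd.DataFrame({
    "DATE": [20000101, 20000102, 20000103, 20000104, 20000105, 20000106],
    "MONTH": [1, 1, 1, 1, 1, 1],
    "BASEL_sunshine": [0.0, 2.5, 4.1, 0.3, 6.2, 1.0],
})
n = 3

# Rows 0..n as features and rows 1..n+1 as labels: n + 1 rows each
X_toy = toy.loc[:n].drop(columns=["DATE", "MONTH"])
y_toy = toy.loc[1:(n + 1)]["BASEL_sunshine"]

# Row i of X_toy is paired with the sunshine hours of day i + 1
for today, tomorrow in zip(X_toy["BASEL_sunshine"], y_toy):
    print(today, "->", tomorrow)
```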

Prepare the data for machine learning

In general, it is important to check if the data contains any unexpected values such as 9999 or NaN or NoneType. You can use the pandas data.describe() function for this. If so, such values must be removed or replaced.

In the present case the data is luckily well prepared and shouldn’t contain such values, so that this step can be omitted.
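For datasets that are not as clean, a quick check could look like the sketch below. The toy frame, its missing value and the 9999 sentinel are hypothetical; describe() makes suspicious extremes visible, while isna() and a comparison count the problem entries per column.

```python
import numpy as np
import pandas as pd

# Hypothetical toy frame with one missing value and one sentinel value (9999)
check_df = pd.DataFrame({
    "BASEL_pressure": [1.0286, 1.0318, np.nan],
    "BASEL_sunshine": [0.0, 9999.0, 4.1],
})

print(check_df.describe())       # a max of 9999 stands out immediately
print(check_df.isna().sum())     # missing values per column
print((check_df == 9999).sum())  # sentinel values per column
```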

Split data and labels into training, validation, and test set

As with classical machine learning techniques, deep learning requires us to split off a hold-out test set which remains untouched during model training and tuning. It is later used to evaluate the model performance. In addition, we will also split off a validation set, the reason for which will hopefully become clearer later in this lesson.

To make our lives a bit easier, we employ a trick to create these 3 datasets, training set, test set and validation set, by calling the train_test_split method of scikit-learn twice.

First we create the training set and leave the remainder of 30 % of the data to the two hold-out sets.

from sklearn.model_selection import train_test_split

X_train, X_holdout, y_train, y_holdout = train_test_split(X_data, y_data, test_size=0.3, random_state=0)

Now we split the 30 % of the data in two equal sized parts.

X_val, X_test, y_val, y_test = train_test_split(X_holdout, y_holdout, test_size=0.5, random_state=0)

Setting the random_state to 0 is a shorthand at this point. Note, however, that changing this seed of the pseudo-random number generator will also change the composition of your data sets. For the sake of reproducibility, this is one example of a parameter that should not change at all.
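As a sanity check on the sizes, the two-step split can be reproduced on a stand-in array. Because data.loc[:nr_rows] includes row nr_rows, the selection has 1096 rows. The arrays here are placeholders, not the weather data. train_test_split rounds a fractional test size up, which should give 767 training, 164 validation and 165 test rows.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder for the 1096 selected rows (labels 0..1095, both ends included)
X_demo = np.arange(1096).reshape(-1, 1)
y_demo = np.arange(1096)

# Same two-step procedure as above: 70 % training, then split the 30 % in half
X_tr, X_hold, y_tr, y_hold = train_test_split(X_demo, y_demo, test_size=0.3, random_state=0)
X_v, X_te, y_v, y_te = train_test_split(X_hold, y_hold, test_size=0.5, random_state=0)

print(len(X_tr), len(X_v), len(X_te))  # 767 164 165
```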

Exercise: Split data into training, validation, and test set

We have been rather generous at selecting rows from the dataset. Our holdout set above amounts to almost an entire year of data. How would the code need to be rewritten in order to obtain two months of data for the validation and test set each?

X_train, X_holdout ... = train_test_split( ..., test_size = .12, ...)

X_val, X_test ... = train_test_split( ..., test_size = 2, ...)

X_train, X_holdout ... = train_test_split( ..., test_size = .33, ...)

X_val, X_test ... = train_test_split( ..., test_size = .33, ...)

X_train, X_holdout ... = train_test_split( ..., test_size = (4./36.), ...)

X_val, X_test ... = train_test_split( ..., test_size = .5, ...)

X_train, X_holdout ... = train_test_split( ..., test_size = 365, ...)

X_val, X_test ... = train_test_split( ..., test_size = .5, ...)

Solution

In the code above, we selected the first 365*3 = 1095 days from the original dataset as the number of rows to use. This is the total number of days we have in our dataset here.

- The first test_size = .12 would leave .12*3*365 for the holdout set. This would amount to 131 days or 4.32 months. This is more than we need. Take caution as well with the second test_size = 2. According to the API reference of train_test_split, this would select only 2 days into X_test.

- The first test_size = .33 would leave .33*3*365 for the holdout set. This would amount to 361 days or almost 12 months. This is more than we need.

- The first test_size = (4./36.) would leave .11*3*365 for the holdout set. This would amount to 4 out of 36 months. This is exactly what we need. With the subsequent test_size = .5 we obtain 2 months of data in the validation set and in the test set each.

- The first test_size = 365 selects 365 rows or days into the holdout. This would be too many for the task at hand.
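The fractions in the solution can be double-checked with a few lines of arithmetic. This sketch assumes, as the solution does, 3 * 365 = 1095 days and an average month of 365 / 12 days; holdout_months is a hypothetical helper. Remember that train_test_split treats a float test_size as a fraction and an int as an absolute number of rows.

```python
def holdout_months(test_size, n_days=3 * 365, days_per_month=365 / 12):
    """Months of data sent to the hold-out set by a fractional test_size."""
    return test_size * n_days / days_per_month

for label, fraction in [("0.12", 0.12), ("0.33", 0.33), ("4/36", 4 / 36)]:
    days = fraction * 3 * 365
    print(f"test_size={label}: {days:.1f} days, {holdout_months(fraction):.2f} months")

# An integer test_size is an absolute row count: 365 rows is a full year
print(f"test_size=365: {365 / (365 / 12):.0f} months")
```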

Build a dense neural network

Regression and classification - how to set a training goal

In episode 2 we trained a dense neural network on a classification task. For this, one-hot encoding was used together with a Categorical Crossentropy loss function.

This measured how closely the distribution of the neural network outputs matched the distribution of the three values in the one-hot encoding.

Now we want to work on a regression task, thus not predicting a class label (or integer number) for a data point. In regression, we want to predict one (and sometimes many) values of a feature. This is typically a floating point number.

In our example we want to predict the sunshine hours in Basel (or any other place in the dataset) for tomorrow based on today's weather data from all locations in the dataset. BASEL_sunshine is a floating point value (i.e. float64). The network should hence output a single float value, which is why the last layer of our network will only consist of a single node.

We compose a network of two hidden layers to start off with something. We go by a scheme with 100 neurons in the first hidden layer and 50 neurons in the second layer. As activation function we settle on the relu function, as it has proved very robust and is widely used. To make our lives easier later, we wrap the definition of the network in a function called create_nn.

from tensorflow import keras

def create_nn():
    # Input layer
    inputs = keras.Input(shape=(X_data.shape[1],), name='input')

    # Dense layers
    layers_dense = keras.layers.Dense(100, 'relu')(inputs)
    layers_dense = keras.layers.Dense(50, 'relu')(layers_dense)

    # Output layer
    outputs = keras.layers.Dense(1)(layers_dense)

    return keras.Model(inputs=inputs, outputs=outputs, name="weather_prediction_model")

model = create_nn()

The shape of the input layer has to correspond to the number of features in our data: 89. We use X_data.shape[1] to obtain this value dynamically.

The output layer here is a dense layer with only 1 node, and we have chosen to use no activation function. While we might use softmax for a classification task, here we do not want to restrict the possible outcomes, at least for a start.

In addition, we have here chosen to write the network creation as a function so that we can use it later again to initiate new models.

Let’s check what our model looks like by calling the summary method.

model.summary()

Model: "weather_prediction_model"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input (InputLayer)           [(None, 89)]              0
_________________________________________________________________
dense (Dense)                (None, 100)               9000
_________________________________________________________________
dense_1 (Dense)              (None, 50)                5050
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 51
=================================================================
Total params: 14,101
Trainable params: 14,101
Non-trainable params: 0

When compiling the model we can define a few very important aspects. We will discuss them now in more detail.

Loss function

The loss is what the neural network will be optimized on during training, so choosing a suitable loss function is crucial for training neural networks.

In the given case we want to encourage the predicted values to be as close as possible to the true values. This is commonly done by using the mean squared error (mse) or the mean absolute error (mae), both of which should work OK in this case. Often, mse is preferred over mae because it “punishes” large prediction errors more severely.

In Keras this is implemented in the keras.losses.MeanSquaredError class (see Keras documentation: https://keras.io/api/losses/). It can be passed to the model.compile method by setting the loss parameter to 'mse', e.g.

model.compile(loss='mse')
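Why does mse punish large errors more? In this sketch two sets of hypothetical predictions have the same mean absolute error, but one concentrates the error in a single large miss. Squaring makes that single miss count four times as much.

```python
import numpy as np

def mean_squared(y, p):
    return np.mean((y - p) ** 2)

def mean_absolute(y, p):
    return np.mean(np.abs(y - p))

y_hours = np.array([5.0, 5.0, 5.0, 5.0])
small_errors = np.array([6.0, 4.0, 6.0, 4.0])   # four errors of 1 hour
one_big_error = np.array([5.0, 5.0, 5.0, 9.0])  # one error of 4 hours

print(mean_absolute(y_hours, small_errors), mean_absolute(y_hours, one_big_error))  # 1.0 1.0
print(mean_squared(y_hours, small_errors), mean_squared(y_hours, one_big_error))    # 1.0 4.0
```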

Optimizer

Somewhat coupled to the loss function is the optimizer that we want to use. The optimizer here refers to the algorithm with which the model learns to optimize on the provided loss function. A basic example for such an optimizer would be stochastic gradient descent. For now, we can largely skip this step and pick one of the most common optimizers that works well for most tasks: the Adam optimizer. Similar to activation functions, the choice of optimizer depends on the problem you are trying to solve, your model architecture and your data. Adam is a good starting point though, which is why we chose it.

model.compile(optimizer='adam',

loss='mse')

Metrics

In our first example (episode 2) we plotted the progression of the loss during training.

That is indeed a good first indicator if things are working alright, i.e. if the loss is indeed decreasing as it should with the number of epochs.

However, when models become more complicated, the loss functions often become less intuitive as well.

That is why it is good practice to monitor the training process with additional, more intuitive metrics.

They are not used to optimize the model, but are simply recorded during training.

With Keras such additional metrics can be added via the metrics=[...] parameter, which can contain one or multiple metrics of interest.

Here we could for instance choose to use 'mae', the mean absolute error, or the root mean squared error (RMSE), which, unlike the mse, has the same units as the predicted values. For the sake of units, we choose the latter.

model.compile(optimizer='adam',

loss='mse',

metrics=[keras.metrics.RootMeanSquaredError()])

With this, we complete the compilation of our network and are ready to start training.
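The point about units can be seen with a handful of hypothetical sunshine values: the mse is in hours squared, and taking the square root brings the RMSE back to hours, the unit of the predictions.

```python
import numpy as np

# Hypothetical true and predicted sunshine hours
y_hours = np.array([2.0, 6.0, 0.0, 8.0])
y_pred_hours = np.array([3.0, 4.0, 1.0, 8.0])

mse_value = np.mean((y_hours - y_pred_hours) ** 2)  # in hours squared
rmse_value = np.sqrt(mse_value)                     # back in hours
print(mse_value, rmse_value)  # 1.5 and about 1.22
```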

Train a dense neural network

Now that we created and compiled our dense neural network, we can start training it.

One additional concept we need to introduce, though, is the batch_size.

This defines how many samples from the training data will be used to estimate the error gradient before the model weights are updated.

Larger batches will produce better, more accurate gradient estimates but also less frequent updates of the weights.

Here we are going to use a batch size of 32 which is a common starting point.

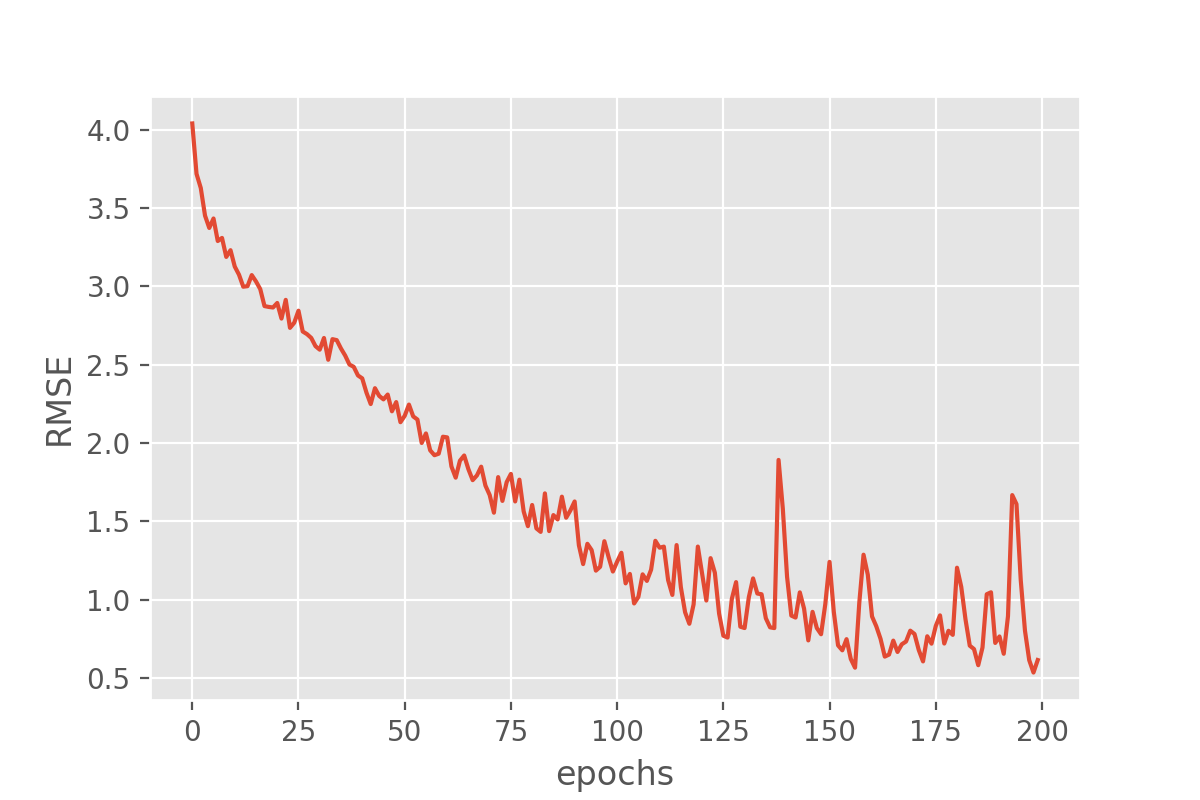

history = model.fit(X_train, y_train,

batch_size=32,

epochs=200,

verbose=2)
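With batch_size=32, one epoch consists of ceil(n_samples / 32) weight updates, since the last batch may be smaller. Assuming X_train has 767 rows (the size produced by the 70/30 split of the 1096 selected rows), that gives 24 updates per epoch, consistent with the 24/24 counter in the model.evaluate output further below. A small sketch, where steps_per_epoch is a hypothetical helper:

```python
import math

def steps_per_epoch(n_samples, batch_size=32):
    # The last batch may be smaller than batch_size, hence rounding up
    return math.ceil(n_samples / batch_size)

n_train = 767  # assumed size of X_train after the split above
steps = steps_per_epoch(n_train)
print(steps, steps * 200)  # 24 updates per epoch, 4800 over 200 epochs
```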

We can plot the training process using the history object returned from the model training:

import seaborn as sns

import matplotlib.pyplot as plt

history_df = pd.DataFrame.from_dict(history.history)

sns.lineplot(data=history_df['root_mean_squared_error'])

plt.xlabel("epochs")

plt.ylabel("RMSE")

This looks very promising! Our metric (“RMSE”) is dropping nicely, and while it keeps fluctuating a bit, it does end up at fairly low RMSE values. But the RMSE is just a single aggregate number, so we might want to look in more detail at how well our just trained model does in predicting the sunshine hours.

Evaluate our model

There is no single way to evaluate how a model performs, but there are at least two very common approaches. For a classification task, one is to compute a confusion matrix for the test set, which shows how often particular classes were predicted correctly or incorrectly.

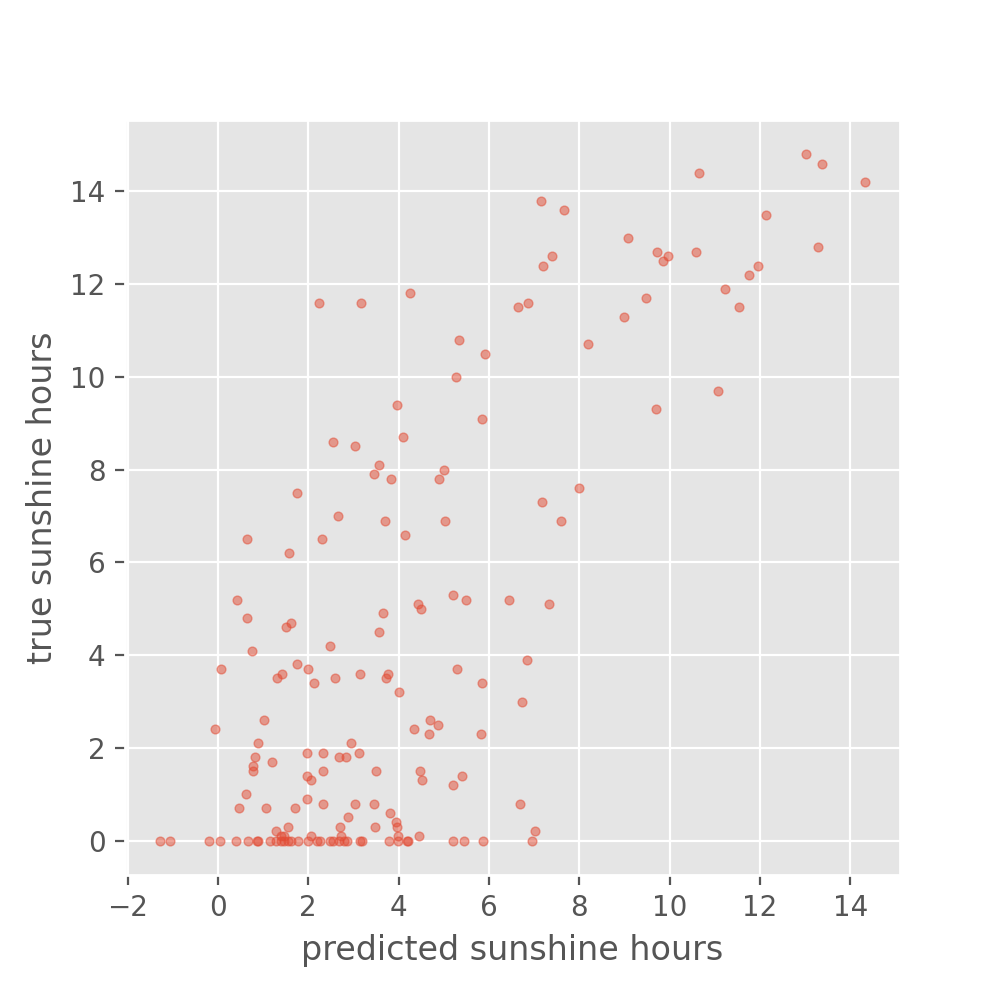

For the present regression task, it makes more sense to compare true and predicted values in a scatter plot. Hint: use plt.scatter().

First, we will do the actual prediction step.

y_train_predicted = model.predict(X_train)

y_test_predicted = model.predict(X_test)

So, let’s look at how the predicted sunshine hours compare with their ground truth values.

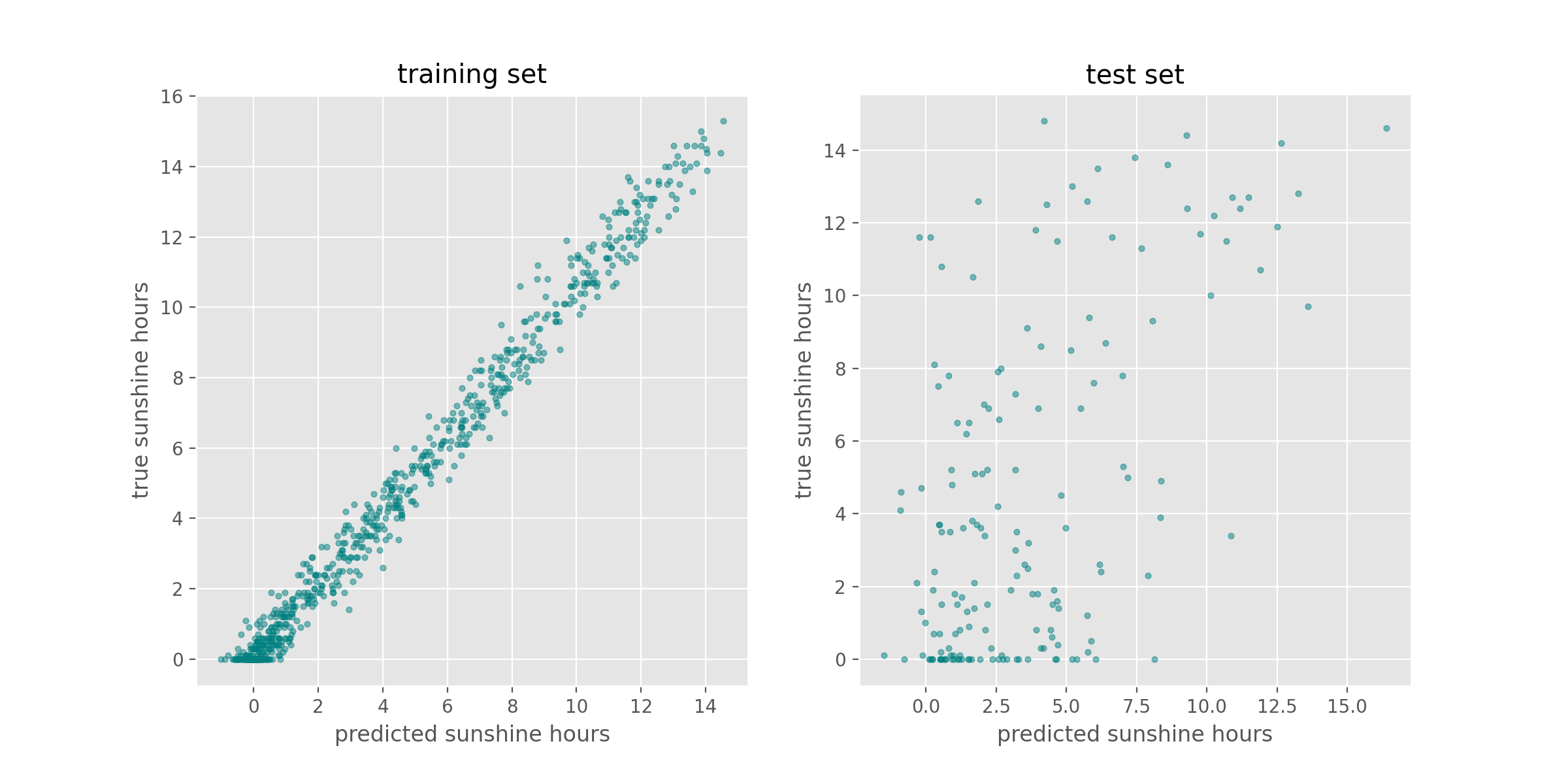

```python
# Optional visual style. Set it before creating the figure: most style
# settings are only picked up when a figure and its axes are created.
plt.style.use('ggplot')

fig, axes = plt.subplots(1, 2, figsize=(12, 6))

# Left panel: predictions vs. true values on the training set
axes[0].scatter(y_train_predicted, y_train, s=10, alpha=0.5, color="teal")
axes[0].set_title("training set")
axes[0].set_xlabel("predicted sunshine hours")
axes[0].set_ylabel("true sunshine hours")

# Right panel: predictions vs. true values on the test set
axes[1].scatter(y_test_predicted, y_test, s=10, alpha=0.5, color="teal")
axes[1].set_title("test set")
axes[1].set_xlabel("predicted sunshine hours")
axes[1].set_ylabel("true sunshine hours")
```

The predictions on the training set look fairly good. In fact, considering that predicting the daily sunshine hours is really not easy, it might even be surprising how well the model does (at least on the training set). Maybe a little too good? We also see a noticeable difference between the training and test set when calculating the exact value of the RMSE:

```python
loss_train, rmse_train = model.evaluate(X_train, y_train)
loss_test, rmse_test = model.evaluate(X_test, y_test)
print('Train RMSE: {:.2f}, Test RMSE: {:.2f}'.format(rmse_train, rmse_test))
```

```
24/24 [==============================] - 0s 442us/step - loss: 0.7092 - root_mean_squared_error: 0.8421
6/6 [==============================] - 0s 647us/step - loss: 16.4413 - root_mean_squared_error: 4.0548
Train RMSE: 0.84, Test RMSE: 4.05
```
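Because the model was compiled with loss='mse' and has no extra regularization losses, the reported RMSE here is simply the square root of the reported loss (√0.7092 ≈ 0.842 and √16.4413 ≈ 4.055). The short NumPy sketch below spells out the computation; the function name rmse is our own, not a Keras or scikit-learn function.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean squared difference."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# The RMSE has the same unit as the target (sunshine hours),
# which makes it easier to interpret than the MSE (hours squared).
print(rmse([0.0, 0.0], [3.0, 4.0]))

# Square roots of the MSE losses printed by model.evaluate above
print(np.sqrt(0.7092), np.sqrt(16.4413))
```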

For those experienced with (classical) machine learning this might look familiar. The plots above expose the signs of overfitting which means that the model has to some extent memorized aspects of the training data. As a result, it makes much more accurate predictions on the training data than on unseen test data.

Overfitting also happens in classical machine learning, but there it is usually interpreted as the model having more parameters than the training data would justify (say, a decision tree with too many branches for the number of training instances). As a consequence, one would reduce the number of parameters to avoid overfitting. In deep learning the situation is slightly different. As in classical machine learning, overfitting can be a sign of a model that is too big, meaning a model with too many parameters (layers and/or nodes). However, in deep learning a higher number of model parameters is often still considered acceptable, and models often perform best (in terms of prediction accuracy) when they are on the verge of overfitting. So, in a way, training deep learning models is always a bit like playing with fire…
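The classical picture of "too many parameters for the data" can be reproduced in a few lines. The sketch below is a toy example with synthetic data, not part of the weather model: it fits polynomials of degree 3 and 11 to 12 noisy samples of a sine curve using NumPy's Polynomial.fit. The degree-11 polynomial has as many coefficients as there are training points, so it can pass through every point, which gives a near-zero training error but a much larger error on unseen points.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(42)

def make_data(n):
    """Synthetic data: a sine curve plus Gaussian noise."""
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(scale=0.3, size=n)
    return x, y

def rmse(y_true, y_pred):
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Names chosen so they do not overwrite the lesson's X_train / y_train
x_fit, y_fit = make_data(12)    # few training points
x_new, y_new = make_data(200)   # many unseen points

results = {}
for degree in (3, 11):
    poly = Polynomial.fit(x_fit, y_fit, degree)  # least-squares polynomial fit
    results[degree] = (rmse(y_fit, poly(x_fit)), rmse(y_new, poly(x_new)))
    print(f"degree {degree:2d}: train RMSE {results[degree][0]:.3f}, "
          f"test RMSE {results[degree][1]:.3f}")
```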

Set expectations: How difficult is the defined problem?

Before we dive deeper into handling overfitting and (trying to) improve the model performance, let’s ask the question: How well must a model perform before we consider it a good model?

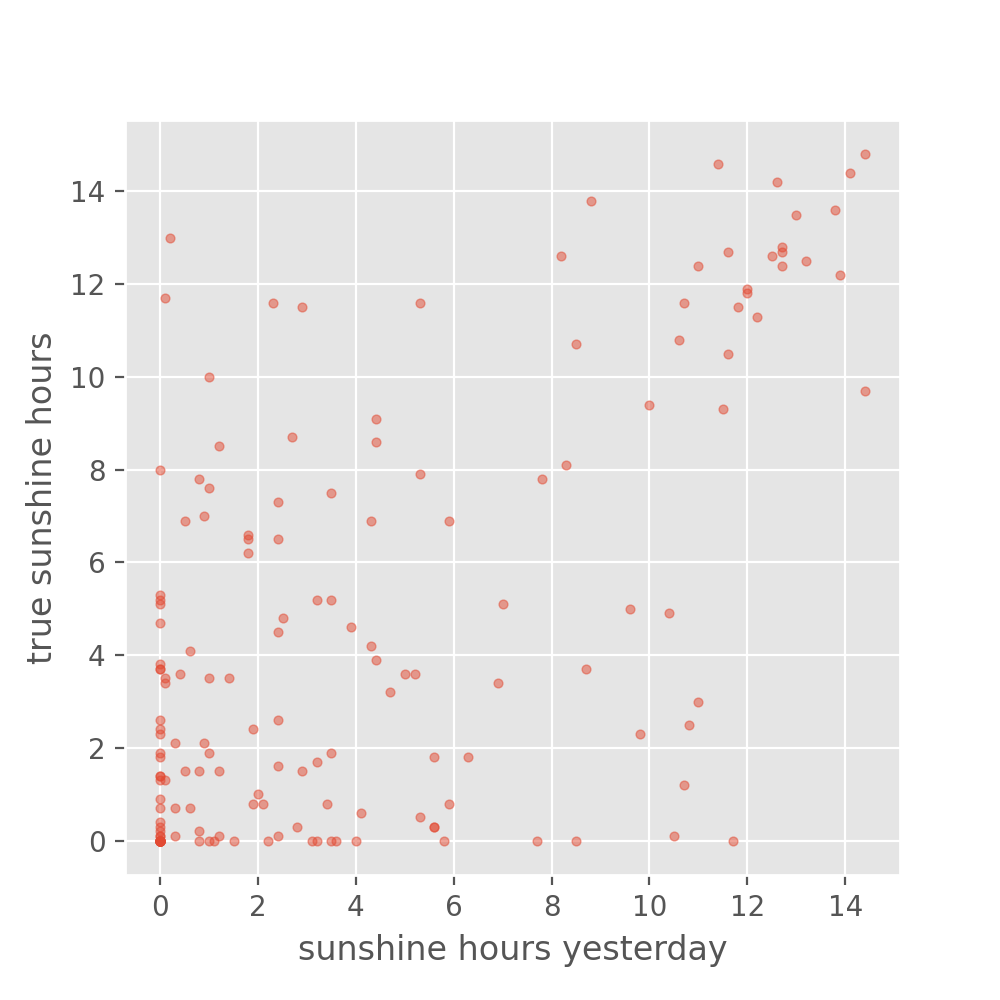

Now that we have defined a problem (predicting tomorrow’s sunshine hours), it makes sense to develop an intuition for how difficult the posed problem is. Frequently, models are evaluated against a so-called baseline. A baseline can be the current standard in the field or, if such a thing does not exist, an intuitive first guess or toy model. The latter is exactly what we will use in our case.

Maybe the simplest sunshine hour prediction we can make is: tomorrow we will have the same number of sunshine hours as today. This sounds very naive, but for many observables, such as temperature, it is already a fairly good predictor.

We can take the BASEL_sunshine column of our data, because it contains the sunshine hours from the day before the day we use as the label.
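The idea behind this "same as today" baseline (often called a persistence forecast) can be sketched independently of our dataset: shift a daily series by one day and use each value as the prediction for the next day. The numbers below are made up and the function name is hypothetical; in our data, the BASEL_sunshine column already plays the role of the shifted series.

```python
import numpy as np

def persistence_baseline(daily_values):
    """Predict each day's value with the previous day's value.

    Returns (predictions, targets), aligned so that predictions[i] is the
    forecast for targets[i]. The first day has no prediction.
    """
    values = np.asarray(daily_values, dtype=float)
    return values[:-1], values[1:]

# Made-up daily sunshine hours for one week
sunshine = [5.0, 7.5, 8.0, 2.0, 0.5, 6.0, 9.0]
predictions, targets = persistence_baseline(sunshine)
baseline_rmse = np.sqrt(np.mean((targets - predictions) ** 2))
print(predictions)
print(targets)
print(f"baseline RMSE: {baseline_rmse:.2f}")
```

With pandas, series.shift(1) produces the same alignment, with a NaN for the first day.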

```python
y_baseline_prediction = X_test['BASEL_sunshine']

plt.figure(figsize=(5, 5), dpi=100)
plt.scatter(y_baseline_prediction, y_test, s=10, alpha=0.5)
plt.xlabel("sunshine hours yesterday")
plt.ylabel("true sunshine hours")
```

It is difficult to interpret from this plot whether our model is doing better than the baseline. We can also have a look at the RMSE:

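```python
import numpy as np
from sklearn.metrics import mean_squared_error

# Take the square root of the MSE explicitly: the squared=False option is
# deprecated in newer scikit-learn versions and has since been removed
rmse_nn = np.sqrt(mean_squared_error(y_test, y_test_predicted))
rmse_baseline = np.sqrt(mean_squared_error(y_test, y_baseline_prediction))
print('NN RMSE: {:.2f}, baseline RMSE: {:.2f}'.format(rmse_nn, rmse_baseline))
```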

```
NN RMSE: 4.05, baseline RMSE: 3.88
```

Judging from the numbers alone, our neural network prediction performs worse than the baseline.

Watch your model training closely

As we saw when comparing the predictions for the training and the test set, deep learning models are prone to overfitting. Instead of iterating through countless cycles of model training and subsequent evaluation on a reserved test set, it is common practice to split off a second dataset to monitor the model during training. This is the validation set, which can be regarded as a second test set. As with the test set, the data points of the validation set are not used for the actual model training itself. Instead, we evaluate the model on the validation set after every epoch during training, for instance to spot clear signs of overfitting.
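One way to obtain such a validation set is to split the data into three disjoint parts. The NumPy sketch below does this with shuffled indices; the 70/15/15 fractions, the seed and the function name are assumptions for illustration, not necessarily how X_train, X_val and X_test were created in this lesson.

```python
import numpy as np

def train_val_test_indices(n_samples, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Shuffle sample indices and split them into three disjoint sets."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    n_val = int(round(n_samples * val_fraction))
    test_idx = indices[:n_test]
    val_idx = indices[n_test:n_test + n_val]
    train_idx = indices[n_test + n_val:]  # everything that is left
    return train_idx, val_idx, test_idx

train_idx, val_idx, test_idx = train_val_test_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))
```

With a pandas DataFrame, the subsets would then be selected with .iloc[train_idx], .iloc[val_idx] and .iloc[test_idx].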

Let’s give this a try!

We need to create a new model; otherwise Keras will simply assume that we want to continue training the model we already trained above.

```python
model = create_nn()
model.compile(optimizer='adam',
              loss='mse',
              metrics=[keras.metrics.RootMeanSquaredError()])
```

But now we train it with the small addition of also passing it our validation set:

```python
history = model.fit(X_train, y_train,
                    batch_size=32,
                    epochs=200,
                    validation_data=(X_val, y_val),
                    verbose=2)
```

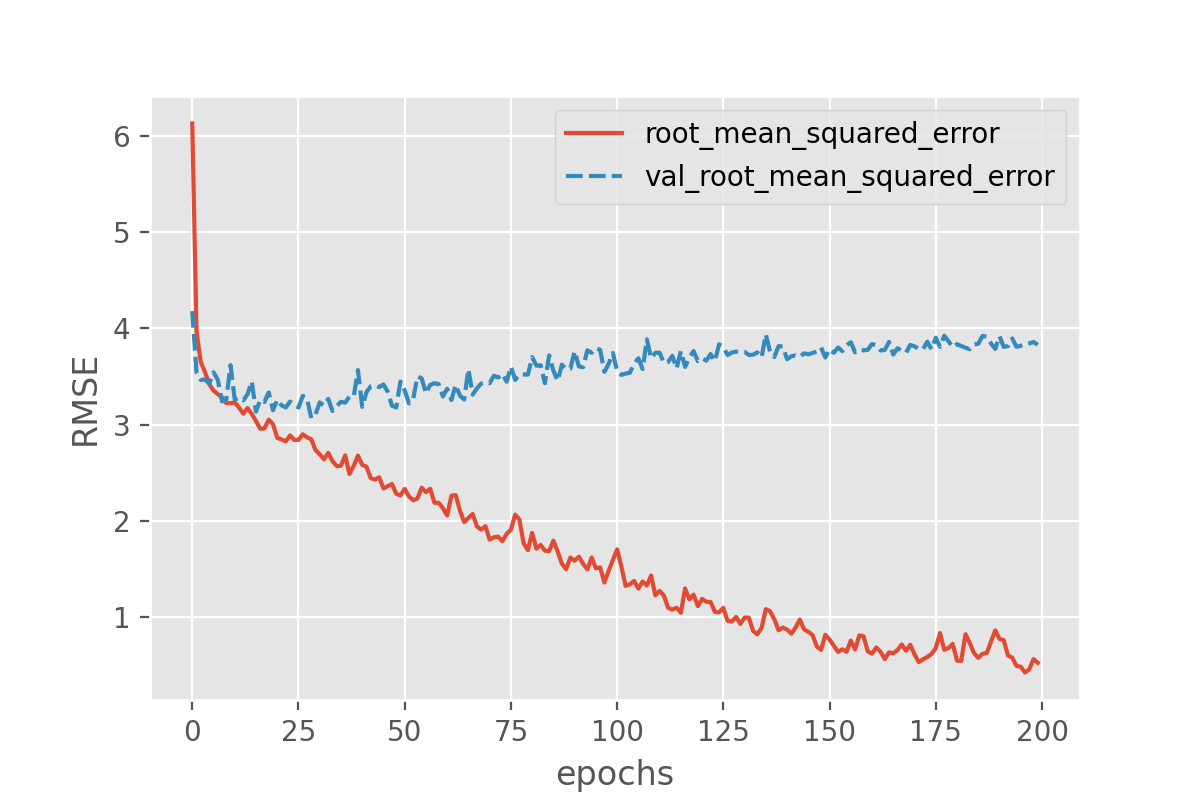

With this we can plot both the performance on the training data and on the validation data!

history_df = pd.DataFrame.from_dict(history.history)

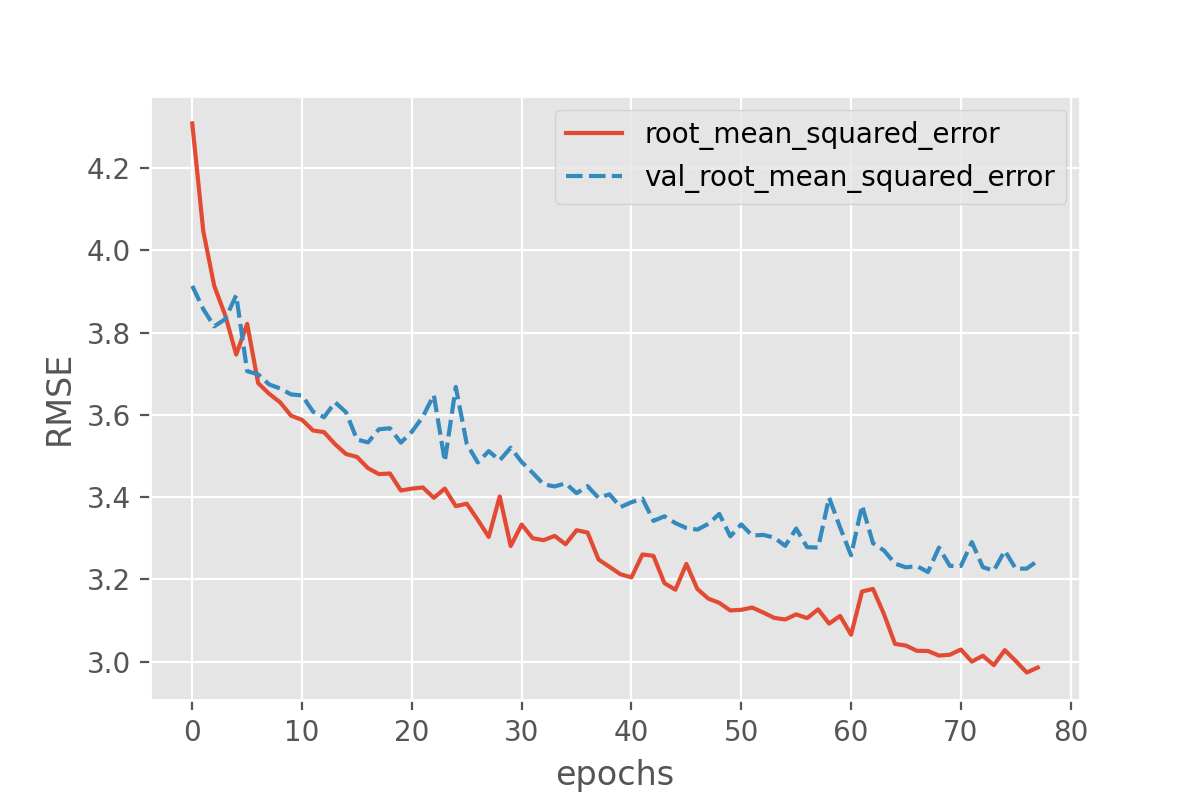

sns.lineplot(data=history_df[['root_mean_squared_error', 'val_root_mean_squared_error']])

plt.xlabel("epochs")

plt.ylabel("RMSE")

Exercise: plot the training progress.

Is there a difference between the training and validation data? And if so, what would this imply?

Solution

The difference between training and validation data shows that something is not completely right here. The model predictions on the validation set quickly seem to reach a plateau while the performance on the training set keeps improving. That is a common signature of overfitting.

Counteract model overfitting

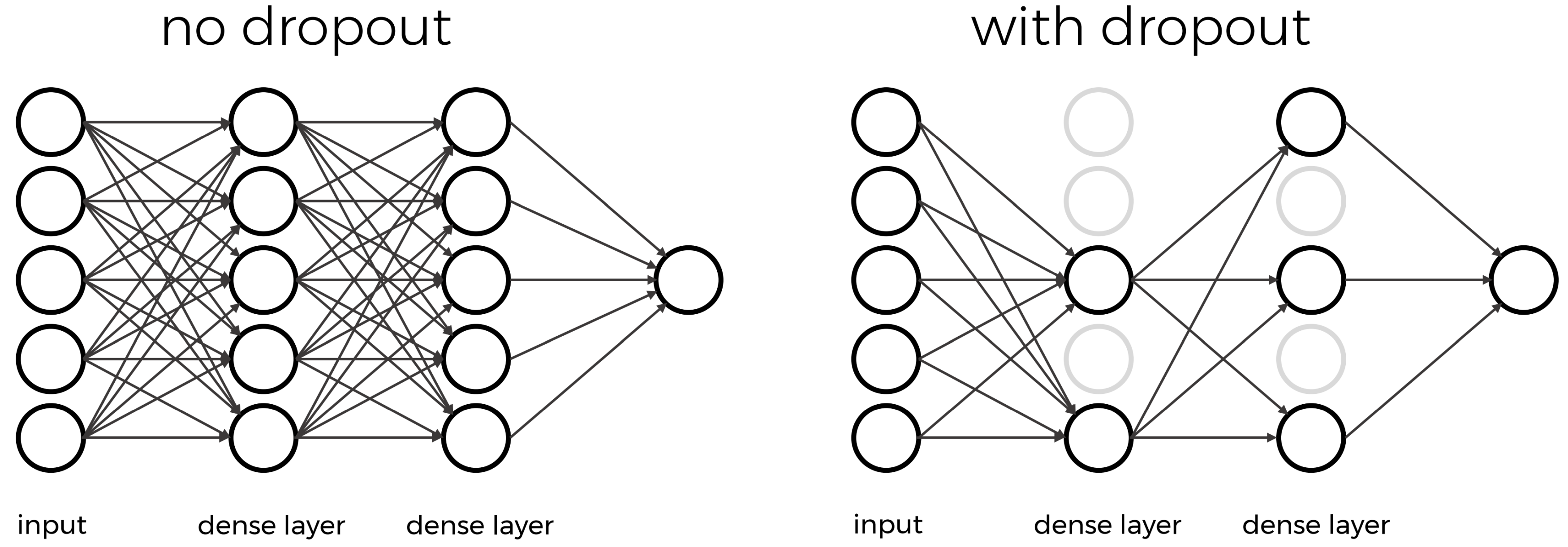

Overfitting is a very common issue and there are many strategies to handle it. The approach most similar to classical machine learning is to reduce the number of parameters.

We can keep the network architecture unchanged (2 dense layers + a one-node output layer) and only play with the number of nodes per layer.

```python
def create_nn(nodes1, nodes2):
    # Input layer
    inputs = keras.layers.Input(shape=(X_data.shape[1],), name='input')

    # Dense layers
    layers_dense = keras.layers.Dense(nodes1, 'relu')(inputs)
    layers_dense = keras.layers.Dense(nodes2, 'relu')(layers_dense)

    # Output layer
    outputs = keras.layers.Dense(1)(layers_dense)

    return keras.Model(inputs=inputs, outputs=outputs, name="model_small")

model = create_nn(10, 5)
```

Let’s check the created model for good measure:

```python
model.summary()
```

```
Model: "model_small"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 input (InputLayer)          [(None, 89)]              0
_________________________________________________________________
 dense_9 (Dense)             (None, 10)                900
_________________________________________________________________
 dense_10 (Dense)            (None, 5)                 55
_________________________________________________________________
 dense_11 (Dense)            (None, 1)                 6
=================================================================
Total params: 961
Trainable params: 961
Non-trainable params: 0
```
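The parameter counts in this summary can be checked by hand: a Dense layer with n_in inputs and n_out nodes has one weight per input/output pair plus one bias per node, i.e. n_in * n_out + n_out parameters. The sketch below applies this to the layer sizes 89 → 10 → 5 → 1 and, for comparison, to 89 → 100 → 50 → 1 (the create_nn(100, 50) configuration used later in this episode). The helper functions are our own.

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus biases (n_out) of one Dense layer."""
    return n_in * n_out + n_out

def count_params(layer_sizes):
    """Total parameters of a stack of Dense layers, e.g. [89, 10, 5, 1]."""
    return sum(dense_params(n_in, n_out)
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))

small = count_params([89, 10, 5, 1])    # 900 + 55 + 6
large = count_params([89, 100, 50, 1])  # 9000 + 5050 + 51
print(small, large, f"reduction: {1 - small / large:.1%}")
```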

With this change, we have reduced the number of parameters by roughly 93% compared with the model with 100 and 50 nodes. Now compile the model and run the training.

```python
model.compile(optimizer='adam',
              loss='mse',
              metrics=[keras.metrics.RootMeanSquaredError()])

history = model.fit(X_train, y_train,
                    batch_size=32,
                    epochs=200,
                    validation_data=(X_val, y_val),
                    verbose=2)
```

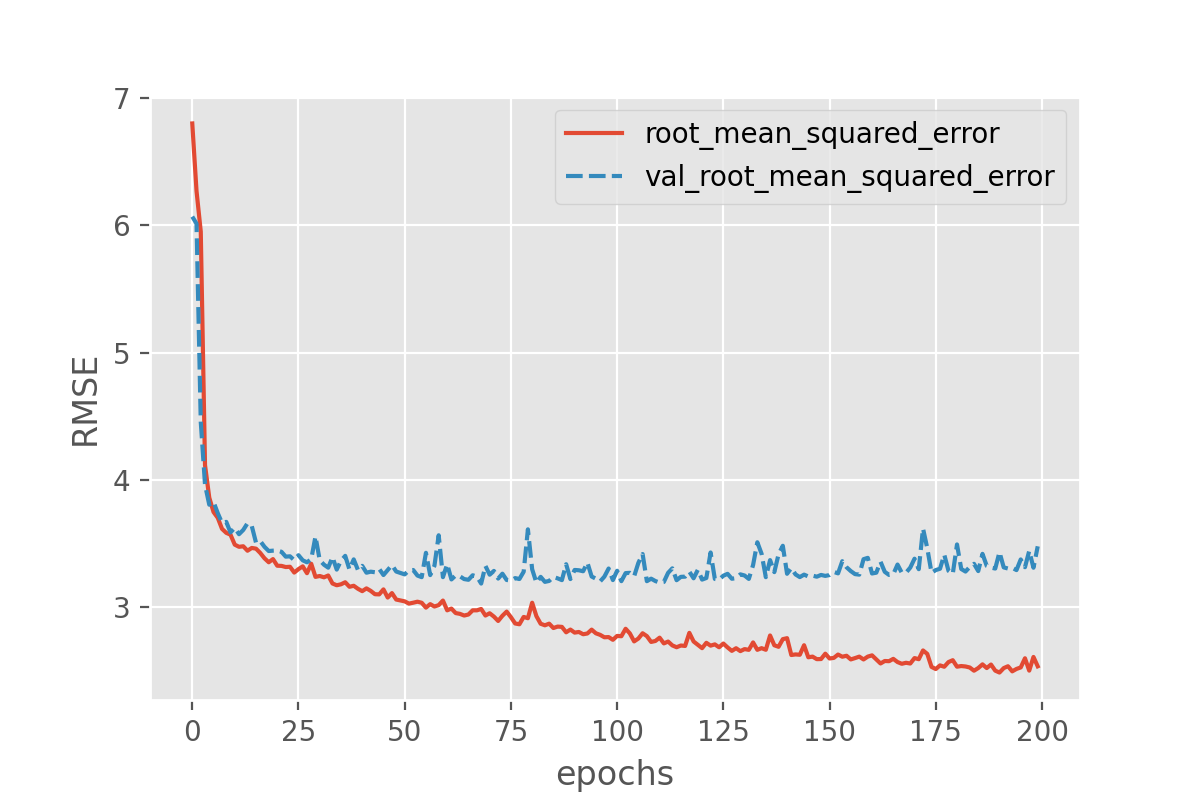

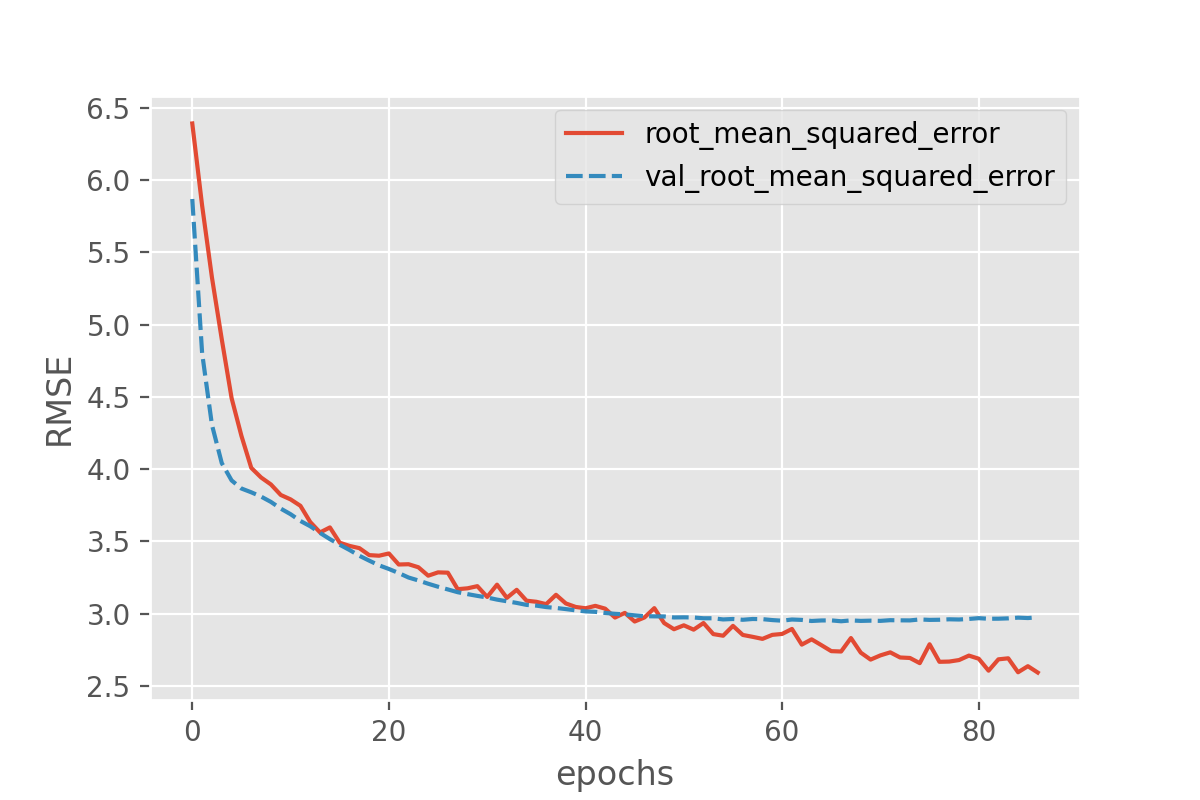

In order to compare, we use the same code to check the training performance.

```python
history_df = pd.DataFrame.from_dict(history.history)
sns.lineplot(data=history_df[['root_mean_squared_error', 'val_root_mean_squared_error']])
plt.xlabel("epochs")
plt.ylabel("RMSE")
```

We saw that reducing the number of parameters can be a strategy to avoid overfitting. In practice, however, this is usually not the (main) way to go when it comes to deep learning. One reason is that finding the sweet spot can be really hard and time-consuming, and it has to be repeated every time the model is adapted, e.g. when more training data becomes available.

Sweet Spots

Note: There is no single correct solution here, but you will have noticed that the number of nodes can be reduced quite a bit! In general, this quickly becomes a very complicated search for the right “sweet spot”, i.e. the settings for which overfitting is (nearly) avoided but the model still performs equally well.

Early stopping: stop when things are looking best

Arguably the most common technique to avoid (severe) overfitting in deep learning is called early stopping. As the name suggests, this technique just means that you stop the model training if things do not seem to improve anymore. More specifically, this usually means that the training is stopped if the validation loss does not (notably) improve anymore. Early stopping is both intuitive and effective, so it has become a standard addition to model training.

To better study the effect, we can now safely go back to models with many (too many?) parameters:

```python
model = create_nn(100, 50)
model.compile(optimizer='adam',
              loss='mse',
              metrics=[keras.metrics.RootMeanSquaredError()])
```

To apply early stopping during training, it is easiest to use the Keras EarlyStopping callback.

This allows us to define the condition for when to stop training. In our case, we base this condition on the validation loss.

However, since we have seen quite some fluctuation of the losses during training above, we will also set patience=10, which means that the training will stop if the validation loss has not gone down for 10 epochs.

```python
from tensorflow.keras.callbacks import EarlyStopping

earlystopper = EarlyStopping(
    monitor='val_loss',
    patience=10,
    verbose=1
)

history = model.fit(X_train, y_train,
                    batch_size=32,
                    epochs=200,
                    validation_data=(X_val, y_val),
                    callbacks=[earlystopper],
                    verbose=2)
```
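The patience logic can be illustrated without Keras. The function below is a simplified, hypothetical re-implementation of the idea (not the Keras source code): it walks through a list of validation losses, remembers the best one, and stops once `patience` epochs in a row have passed without an improvement. The loss values are made up.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    An epoch counts as an improvement if its loss is lower than the best
    loss so far by more than min_delta. Training stops after `patience`
    consecutive epochs without improvement.
    """
    best_loss = float("inf")
    best_epoch = None
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience never ran out: training runs for all epochs
    return len(val_losses) - 1, best_epoch

# Made-up validation losses: improving at first, then drifting upwards
losses = [9.0, 7.5, 6.8, 6.5, 6.6, 6.4, 6.7, 6.9, 7.0, 7.2]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=3)
print(f"stopped after epoch {stop_epoch}, best epoch was {best_epoch}")
```

Note that Keras' EarlyStopping keeps the weights of the last epoch by default (here that would be epoch 8 rather than the best epoch 5). Passing restore_best_weights=True to EarlyStopping rolls the model back to the weights of the best epoch when training is stopped early.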

As before, we can plot the losses during training:

```python
history_df = pd.DataFrame.from_dict(history.history)
sns.lineplot(data=history_df[['root_mean_squared_error', 'val_root_mean_squared_error']])
plt.xlabel("epochs")
plt.ylabel("RMSE")
```

This still seems to reveal the onset of overfitting, but the training stops before the discrepancy between training and validation loss can grow further. Besides avoiding severe cases of overfitting, early stopping has the additional advantage that the number of training epochs is regulated automatically. Instead of comparing training runs for different numbers of epochs, early stopping allows us to simply set the number of epochs to a desired maximum value.

What might be a bit unintuitive is that the training runs might now end very rapidly. This might spark the question: have we really reached an optimum yet? And often the answer to this is “no”, which is why early stopping is frequently combined with other approaches to avoid overfitting. Overfitting means that a model (seemingly) performs better on seen data compared to unseen data. One then often also says that it does not “generalize” well. Techniques to avoid overfitting, or to improve model generalization, are termed regularization techniques and we will come back to this in episode 4.

BatchNorm: the “standard scaler” for deep learning

A very common step in classical machine learning pipelines is to scale the features, for instance by using scikit-learn’s StandardScaler.

This can in principle also be done for deep learning.

An alternative, more common approach is to add BatchNormalization layers, which normalize their inputs using the mean and variance of each batch during training and learn how to scale and shift the result.

Similar to dropout, batch normalization is available as a network layer in Keras and can be added to the network in a similar way.

It does not require any additional parameter setting.
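For intuition, here is a NumPy sketch of what a batch normalization layer computes on one batch during training: each feature is standardized with the mean and variance of the current batch, then scaled by a learned factor gamma and shifted by a learned offset beta. This is a simplified illustration, not the Keras implementation; in particular, at inference time Keras uses moving averages of the batch statistics instead. The epsilon of 0.001 matches the Keras default, and the input batch is synthetic.

```python
import numpy as np

def batch_norm_forward(x, gamma, beta, epsilon=1e-3):
    """Normalize each column (feature) of x with the statistics of this batch,
    then apply the learned scale (gamma) and offset (beta)."""
    batch_mean = x.mean(axis=0)
    batch_var = x.var(axis=0)
    x_hat = (x - batch_mean) / np.sqrt(batch_var + epsilon)
    return gamma * x_hat + beta

rng = np.random.default_rng(0)
# A synthetic batch of 32 samples with 3 features on very different scales
batch = rng.normal(loc=[0.0, 50.0, -3.0], scale=[1.0, 20.0, 0.5], size=(32, 3))

# gamma = 1 and beta = 0 are the initial values of the learned parameters
out = batch_norm_forward(batch, gamma=np.ones(3), beta=np.zeros(3))
print(out.mean(axis=0).round(6))
print(out.std(axis=0).round(3))
```

Unlike StandardScaler, which is fitted once on the whole training set, these statistics change from batch to batch, and gamma and beta are updated by the optimizer like any other weights.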

```python
from tensorflow.keras.layers import BatchNormalization
```

The BatchNormalization can be inserted as yet another layer into the architecture.

def create_nn():

# Input layer

inputs = keras.layers.Input(shape=(X_data.shape[1],), name='input')

# Dense layers

layers_dense = keras.layers.BatchNormalization()(inputs)